Mesopotamian Science and Technology - III

last update: 20 Nov. 2019

I have prepared three webpages.

The first webpage covers the history of writing and numbers (including cuneiforms) and astronomy (including lists of omens).

The second webpage covers the so-called “science of crafts” with cave painting, tool making (including stone tools), fire and pre-pottery technologies, weaving and textiles (ca. 60,000 BC), ceramic sculptures and pottery (starting ca. 29,000 BC), boats (ca. 10,000 BC), the wheel (ca. 6500 BC), and concludes with early patterns of trade and maps (ca. 12,000 BC).

The third webpage covers irrigation, and metallurgy of the Copper, Bronze and Iron Ages.

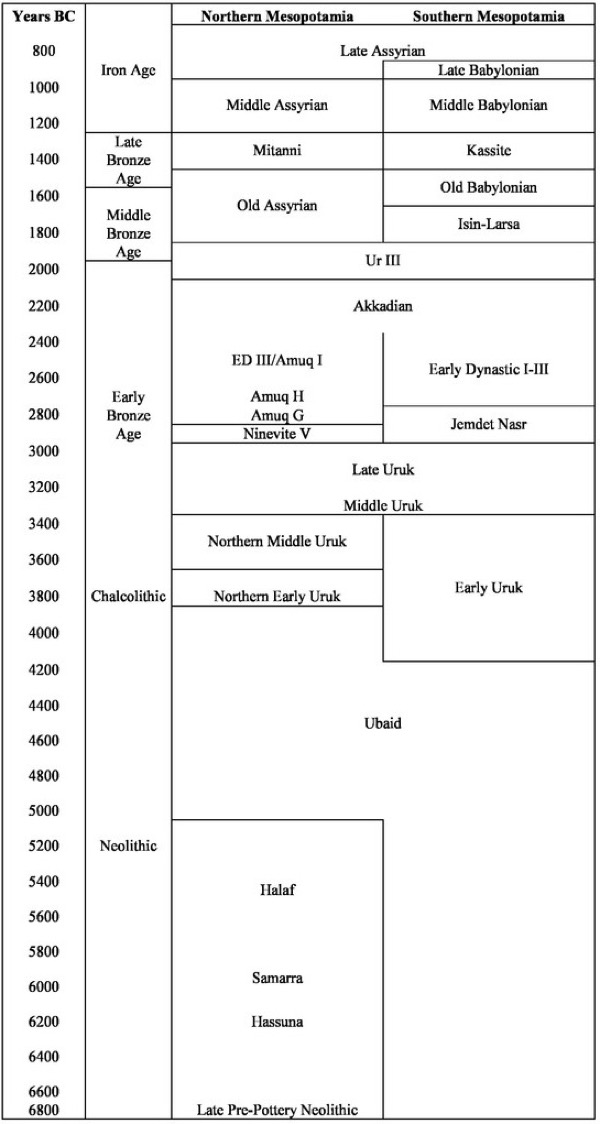

It is worthwhile keeping in mind some of the 'periods' that make up Mesopotamian history:

Natufian Culture (ca. 13,000-7500 BC)

Neolithic "New Stone Age" (ca. 10,000-4500 BC)

Pre-Pottery Neolithic (somewhere between 10,000-5500 BC)

Pre-Pottery Neolithic A (ca. 9500-8000 BC)

Pre-Pottery Neolithic B (ca. 7600-6000 BC)

Pottery Neolithic (ca. 7000-5500 BC)

Hassunah Period with Hassuna Culture ca. 6900-6500 BC and Samarra Culture ca. 7000-4800 BC

Halaf Period (ca. 6500-5100 BC)

Ubaid Period (ca. 6200-4000 BC)

Chalcolithic "Copper Age" (ca. 5500-3000 BC)

Warka and Proto-Literate Periods (Uruk Period ca. 4100-3000 BC)

Gaura and Ninevite Periods (Tepe Gawra ca. 5000-1500 BC, Nineveh ca. 6000-612 BC)

Jemdet Nasr Period (ca. 3100-2900 BC)

Bronze Age (ca. 3300-1200 BC)

Early Bronze Age with Early Dynastic Period (ca. 2900-2350 BC), Akkadian Empire (ca. 2350-2150 BC), Third Dynasty of Ur (2112-2004 BC) and the Early Assyrian Kingdom (ca. 2600-2025 BC)

Middle Bronze Age with Early Babylonia (ca. 1900-1800 BC), First Babylonian Dynasty (ca. 1830-1531 BC), Empire of Hammurabi (ca. 1810-1750 BC) and Minoan eruption (ca. 1620 BC)

Late Bronze Age with Old Assyrian period (2025-1378 BC), Middle Assyrian Period (ca. 1392–934 BC), Kassites in Babylon (ca. 1595–1155 BC) and Late Bronze Age collapse (ca. 1200-1150 BC)

Iron Age with Syro-Hittite States (ca. 1180-700 BC), Neo-Assyrian Empire (911-609 BC) and Neo-Babylonian Empire (626-539 BC)

As you can guess from the above list, establishing a definitive stratigraphy (how artefacts are 'layered' and dated on a particular site, and how the context between layers is interpreted) has always been an open issue in archaeology. In addition, some terms such as Sumerian, Sumero-Akkadian, Assyro-Babylonian, etc. are often not precise in terms of geographic coverage or chronology (even spellings differ). Lists of time periods are notorious for differing from one text to another, and I have simply used a range of dates given in a variety of sources. Individual authors are often very coherent in their definitions and chronology, but when you move across multiple publications and websites, as I have done, you immediately realise that each uses a slightly different chronology to contextualise their research. The key here is that we are trying to discover features of Mesopotamian science and mathematics, not create a coherent description of the history of one of the world's earliest civilisations.

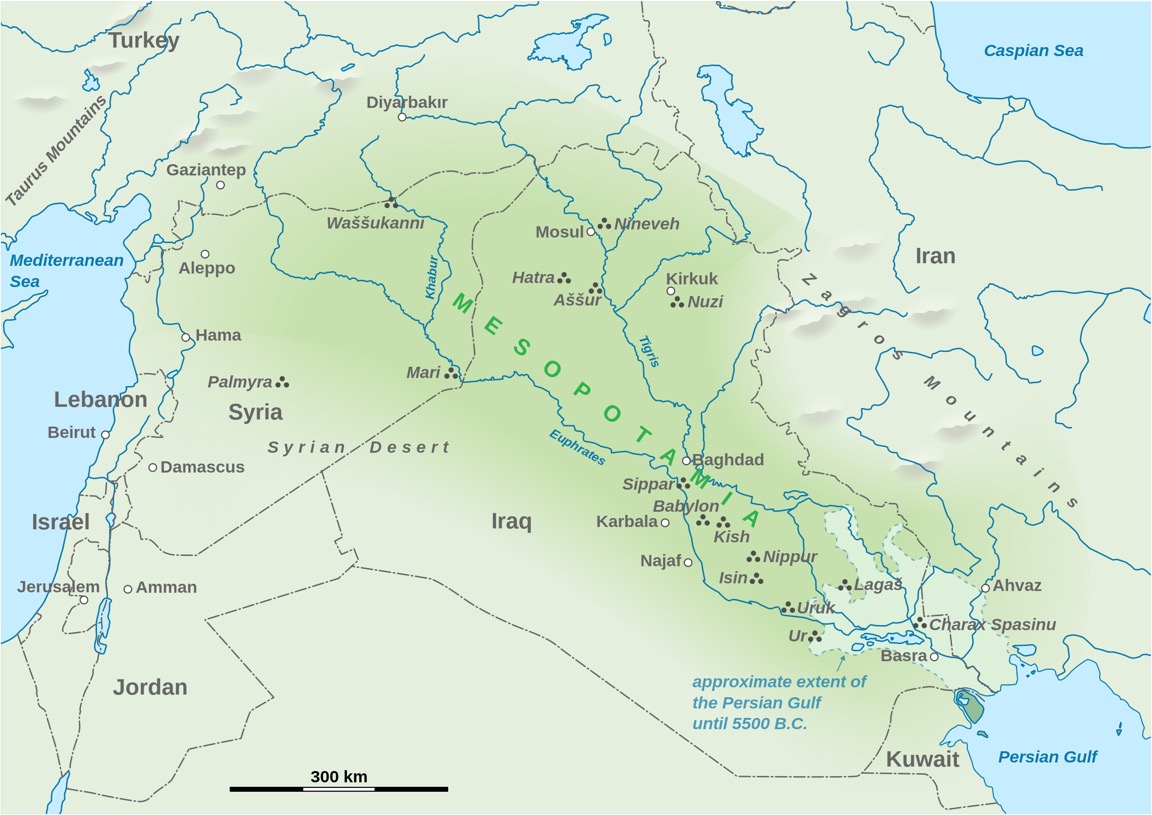

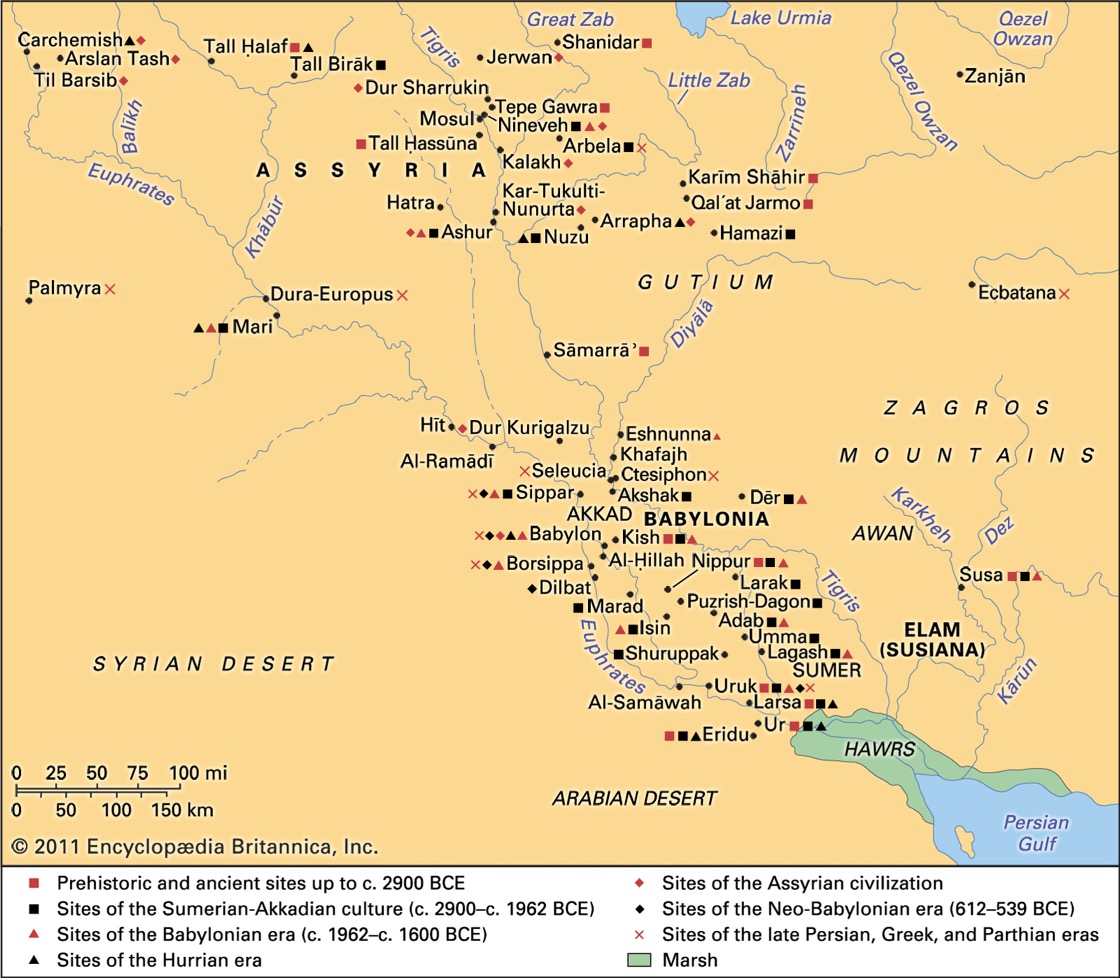

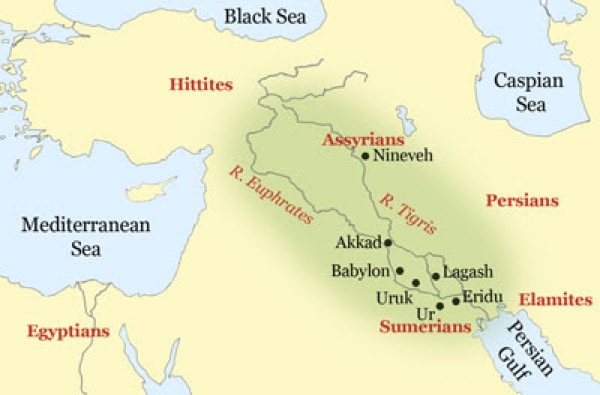

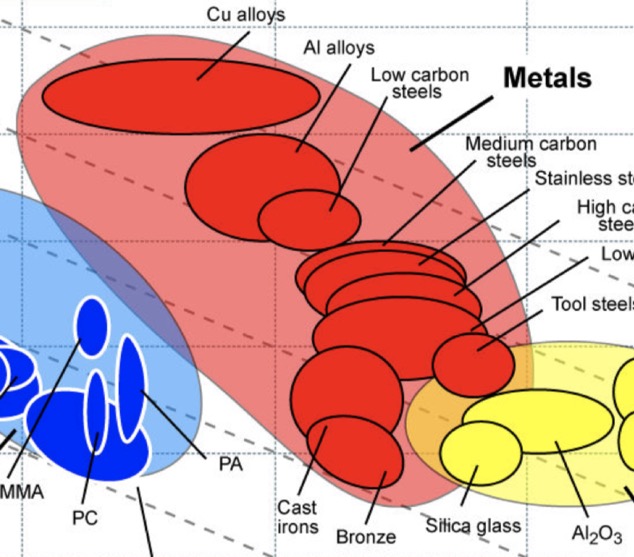

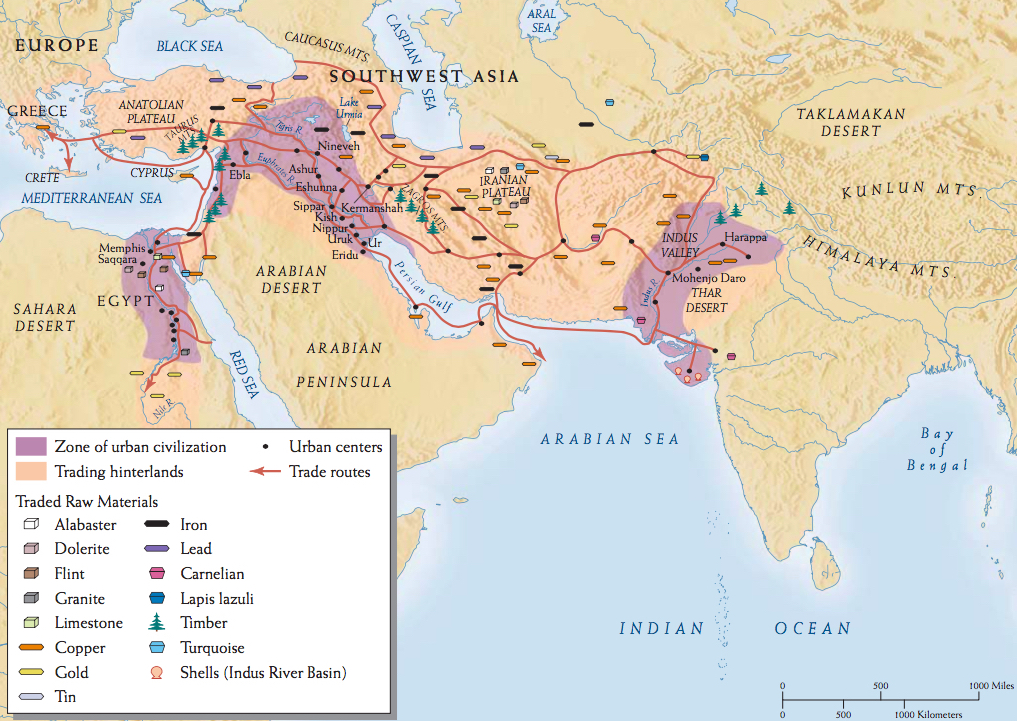

Below we have a simple map of the region indicating both present-day locations and some of the ancient sites that are mentioned in these three webpages.

The difference between North and South Mesopotamia

It is perhaps important here also to revisit the difference between the North (Upper) and South (Lower) Mesopotamia. On the first two webpages on Mesopotamia I did not really spend much time on looking at North-South issues, probably because it was not really an important topic until vast urban areas started to emerge in South Mesopotamia with the city-state of Uruk (from ca. 2900 BC).

Upper Mesopotamia was the area with the earliest signs of agriculture and the domestication of animals. It is this area that is often associated with Pre-Pottery Neolithic A, and it was an area continuously occupied from the time of the hunter-gatherers, ca. 9000 BC. Domestication of sheep and goats came ca. 7600 BC with the Pre-Pottery Neolithic B, and weaving and pottery came with the Halaf culture (ca. 6100-5100 BC). From ca. 2500 BC Upper Mesopotamia was the heartland of ancient Assyria (through to 612 BC).

Here are a few of the most important Upper Mesopotamian sites:

Tell Sabi Abyad (ca. 7500-5500 BC) is in the Balikh River Valley, almost on the border between Syria and Turkey. It is home to the earliest pottery in Syria (ca. 6900-6800 BC), which was being mass produced ca. 6700 BC. The site has revealed the largest collection of clay tokens and seals yet found at any site.

Tell Halula (ca. 7750-6780 BC) is also very near the Syrian-Turkish border. Forty levels of occupation are recorded, and it is possibly the home of the oldest paintings of people in the Middle East. It was also home to a long initial stage of pottery production, pre-Halaf (pre-6100 BC).

Tell Qaramel (occupied from ca. 15,000 BC) is just north of modern-day Aleppo and again very near the Syrian-Turkish border. It is home to the first evidence of a permanent stone-built settlement, and had massive walls 2 millennia before the stone tower of Jericho. A large polished copper nugget dating from ca. 10,464-9246 BC is one of the earliest finds of metal. Early remains had their heads removed, indicating a 'head cult'.

Shir (ca. 7000-6100 BC) is, I think, in Hama Province about 50 km from the Mediterranean. It is home to some of the earliest ceramic finds in the Near East, and is famous for its massive, carefully created lime plaster floors. Given that it was abandoned ca. 6100 BC it provides a unique insight into Neolithic village life untouched by later cultures.

Nineveh is very near Mosul in modern-day northern Iraq. The area was settled in ca. 6000 BC and for a period of about 50 years it was the world's largest city before being sacked in 612 BC.

Tell Hassuna is not far from Nineveh and to the west of Mosul in modern-day Iraq (occupied ca. 6000 BC). This is the so-called 'type site' for the Neolithic Hassuna Culture.

Jarmo (ca. 7090-4950 BC) is in the north-eastern part of Iraq, in the foothills of the Zagros Mountains. It is one of the oldest sites where pottery has been found.

Here are a few of the most important Lower Mesopotamian sites:

Uruk (ca. 4000 BC to ca. 700 AD) was the largest city in the world in ca. 2900 BC, and as such saw the emergence of urban life. It is the 'type site' for the Uruk Period (ca. 4000-3100 BC), which was a transitional period between the Chalcolithic period and the Early Bronze Age. Uruk was possibly the home of writing, of the cylinder seal, and of the first example of architectural work in stone.

Babylon (ca. 2800 BC to ca. 1000 AD) is just south of Baghdad in Iraq, and was for a time capital of southern Mesopotamia. Between ca. 1770 BC and ca. 1670 BC it was the largest city in the world (ca. 290,000 people).

Ur (ca. 3800-500 BC) was a Sumerian city-state, located in the Dhi Qar region in present-day Iraq. It was one of the most important trading cities in southern Mesopotamia.

Kish (ca. 3100-150 BC) was a Sumerian city, located south of Baghdad, in Iraq. It is said to have been the first city to have had kings after the deluge.

It is easy to get confused between the different Mesopotamian cultures/periods that emerged from both the North and South, and how they overlapped and mixed. The chart below tries to clarify things (dates may vary from one source to another).

Upper Mesopotamia developed a rain-fed agriculture, whereas the South had to develop irrigation. It is in the South that we see first the mobilisation of labour for the construction and maintenance of canals, the development of urban settlements, and a centralised system of political authority. Tell al-‘Ubaid in the South, and only 250 km from the Persian Gulf, gave its name to the Ubaid period (ca. 6500-3800 BC). It produced a specific type of pottery that was responsible for the gradual change in the pottery found in the northern Halaf Culture, thus the mention of the Halaf-Ubaid Transitional Period (ca. 5600-5000 BC). The Ubaid period was followed in the South by the Uruk period (ca. 4100-2900 BC), which was named after the Sumerian city of Uruk. Sumer (perhaps as early as ca. 5500 BC) was the first ancient urban civilisation in southern Mesopotamia. It is with the Sumerians that we have the first examples of proto-writing, ca. 3500 BC, but they may themselves have evolved from the Samarra culture (ca. 5500-4800 BC) of northern Mesopotamia. The Uruk period of Sumerian civilisation was followed by the so-called Early Dynastic Period (ca. 2900-2500 BC) and the First Dynasty of Lagash (ca. 2500-2270 BC), which annexed Kish, Uruk, Ur and Larsa. It was Sargon of Akkad (ca. 2334-2279 BC), emperor of the Akkadian Empire (ca. 2334-2154 BC), who conquered the Sumerian city-states.

Not explicitly mentioned so far, but Mesopotamia (and Anatolia, Near East, Middle East, or Fertile Crescent) was key to the domestication of both plants and animals. Here we will just list for reference what was domesticated in the region, and when. The dog (i.e. wolf) was certainly domesticated in multiple places ca. 13,000 BC (but some experts go as far back as 33,000 BC). Domesticated lentils were being eaten ca. 11,000 BC in the Middle East, and goat ca. 10,000 BC in the Near East. Then ca. 9000 BC we have the domestication of the fig tree, emmer wheat, flax, peas, and sheep in both Anatolia and the Zagros mountains. Einkorn wheat and barley were domesticated ca. 8500 BC in the Fertile Crescent, and the aurochs (i.e. cattle) in the Middle East ca. 8000 BC. Durum wheat was domesticated ca. 7000 BC and the pistachio was a common food product ca. 6750 BC. Sometime between ca. 6000-4000 BC the watermelon, olives, and grapes were domesticated, and ca. 3500 BC the pomegranate and hemp were domesticated. Later came oats, the quince, chestnuts, rye, and lime. Check out “Evolution, consequences and future of plant and animal domestication”, Domestication, and the List of domesticated animals and the List of domesticated plants.

North versus South

As we have said, the southern Ubaid period influenced the northern Halaf culture, but the North remained largely egalitarian with small communities without centralised leadership, and with few signs of status markings. Both house forms (open courtyards and large domed ovens) and styles of painted pottery spread from the South. But both the North and South more or less simultaneously evolved a desire for monumental architecture, for long-distance trade, and for specialised craft production. Both North and South went on to develop a liking for large-scale feasting, religious institutions and specialised temples, mass production of ceramics, urbanism, but also organised violence. Both also controlled property, as evidenced by the widespread use of clay or bone seals and stamp seals. These seals were placed on jars, containers, boxes, and even storerooms, suggesting that they were not just reserved for an elite or a centralised political authority.

There are signs both in the North and South of monumental buildings intended for manufacturing of craft items, e.g. with plastered basins and bins, pounders and grinding stones, tools, large ovens, and stocks of bitumen, marble, flint and obsidian. We know that obsidian as a raw material had a surprisingly complex trading pattern, as did the trade in pottery (and also with the later exchange of metal ingots and tools). This is not to say that there were not many localised exchange networks. For example the so-called “sprig ware” pottery vessels with a distinctive vegetal motif were made in the North, and were distributed quite extensively in the region prior to the arrival of people and ideas from southern Mesopotamia. And large settlements also existed in the North, even if most settlements remained as villages.

One administrative technology conspicuous by its absence in northern Mesopotamia was writing. The development of pictographic writing, later developed into the cuneiform script, has its origins in the urbanism of southern Mesopotamia.

Things did not always happen slowly and without violence, for example in Tell Brak (in the North-East) clusters of human skulls were found, and there was an almost complete absence of hand and foot bones (and no bodies of children). In addition, the pattern of animal remains suggests that feasting events were held on and over the dead bodies.

What is certain is that by ca. 3500 BC the North also had a few very large cities, e.g. at one point in time Tell al-Fakhar in the North was larger than Uruk in the South, and Tell Brak was almost as big. It is unclear if these were truly urban centres, since they encompassed much open space set between smaller settlement clusters, but it is clear that they did have all the indications of being economic, religious and political centres. These larger northern cities were in many ways city-states. One sure sign is that even ca. 4500 BC there was a system of seals in place run by a centralised bureaucracy that redistributed rations to dependents. Chiefs or 'officials' (it all added up to the same thing) existed and 'Rule' was almost certainly administered by powerful households, or “households of the gods”.

This tells us that the reality was that the (southern) Uruk expansion to the North took time, and was largely driven by an asymmetric trade relationship. This asymmetry has been described as being due to the early use of irrigation in the South that increased crop yields and reduced the risk inherent in annual climatic fluctuations. Their rivers and large canals provided a simple means of transport for bulk items such as harvested cereals.

In the period ca. 3000-2600 BC the Uruk colonies vanished in the North, along with almost all traces of interaction with southern Mesopotamia. Trade appears to have dried up, and ceramic traditions gave way to regional assemblages. There are signs that administrative technologies such as tokens and sealed bullae almost disappeared, and there are few signs of writing being used in the North, although cylinder seals continued to be used. Pottery was mass produced through to ca. 3000 BC, and then labour-intensive surface decoration was introduced. Southern Mesopotamia retained large temples, usually run by important economic households, whereas the North reverted to small, single-room structures with some benches and a small podium. Residential buildings in the North became smaller, with fewer ornamentations. A central round building appeared containing vaulted storage rooms, brick platforms, and industrial scale ovens (a sign that in the North they reverted back to chiefdoms). But there are also clear signs that some villages were specialised centres for storage, processing and distribution of grain products within a regional economic system. There was also a specialised animal economy focused on sheep and goat production.

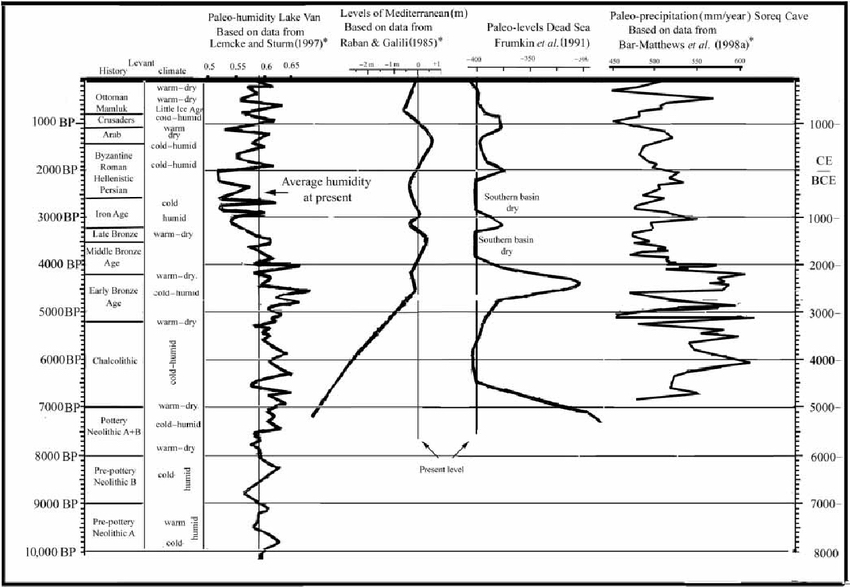

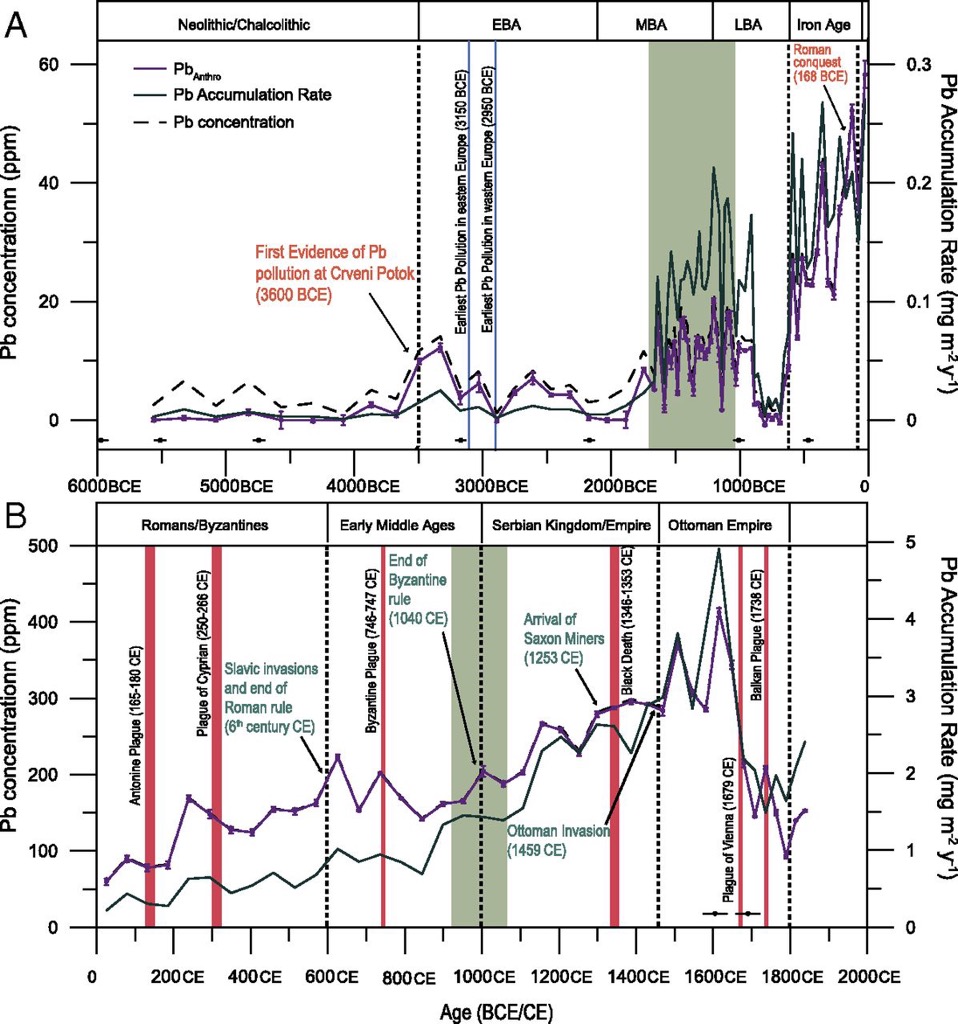

One telling measure is outlined in the graph above on the so-called 'paleo-hydrology' of the Middle East. It shows the humidity levels in Lake Van, the largest lake in Turkey (and one that is influenced by the Mediterranean climate). This endorheic lake is a saline soda lake. The Dead Sea is a salt lake that actually sits 430 m below sea level. The Soreq Cave in Israel has been used to reconstruct the region's climate for the past 185,000 years.

Lake Van has provided invaluable information on the pollen count and the 18O/16O ratio over time. This information suggests that around 9,000 to 10,000 years ago the weather changed to being warmer and drier, and it would appear that near the end of the 3rd millennium BC it continued to warm up (before turning cold). The average annual precipitation declined, with a minimum at ca. 2000 BC. This appears to be linked to the decline of northern cities and the move (back) to a nomadic and semi-nomadic society ca. 1800 BC. This move is often called 'pastoral nomadism' to describe a mobility based upon the seasonal availability of pasture and water for animal husbandry.

In the period ca. 2600-2000 BC the North went through a period of rapid re-urbanisation. They suddenly started building massive city walls, terraces, palaces and temples. Specialisation in the production of pottery, metals and other crafts increased, along with agricultural and pastoral production. The earliest cuneiform tablets in the North are found in Tell Beydar and Tell Brak. Some Tells grew by nearly a factor of 10 over 100 years, and major cities such as Tell Taya rapidly emerged. This particular Tell had wide streets radiating outwards from a central core towards gates in the outer city wall.

Did North Mesopotamia develop independently of the South?

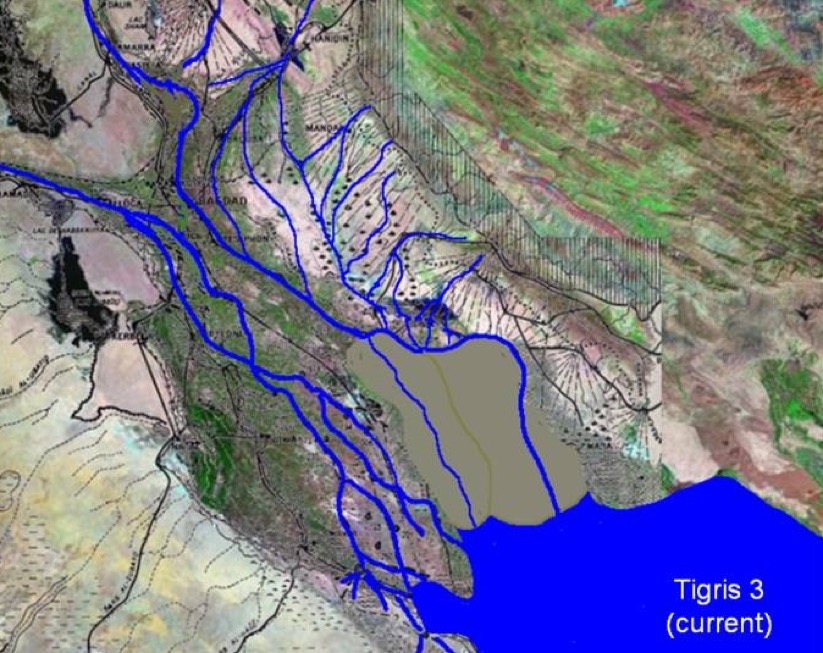

The above description is what might be called the 'classical' view of the South as being the 'heartland of cities'. However, there is a different view on how North-South Mesopotamia evolved over time. The way the Tigris and Euphrates Rivers became highly 'braided' as they entered the Persian Gulf created an environment ready for irrigation and lacustrine plains. The 'classical story' tells us that this created a unique habitat ready for the emergence of the first cities and as a result some of the world's earliest 'great powers', e.g. Sumerian (ca. 4500-1900 BC), Akkadian (ca. 2334-2154 BC) and Babylonian (ca. 1895-539 BC). The story goes on to say that North Mesopotamia developed through contact with the South.

But now many experts think that the North evolved independently as a major cultural complex. 'Tells', the early Mesopotamian cities, were just level upon level of mud-brick and pisé constructions, making them difficult to 'reconstruct' and analyse. Expert knowledge actually often comes from the study of smaller farming settlements that are easier to 'unfold' but don't provide the same depth of information on wider social and economic activities. We mentioned Tell Brak in the North, and the evidence now suggests that it was a major settlement well before the emergence of southern city-states such as Uruk. In fact Tell Brak was nicely situated on a major route from the metal-rich Anatolia to the Tigris Valley, at a river crossing and near rich agricultural land suitable for nomadic pastoralism.

Other northern sites such as Hamoukar and Tepe Gawra also present evidence of monumental buildings, industrial workshops and the making of prestige goods dating back to ca. 5000 BC.

Tell Brak was already known as an important 3rd-millennium site covering 40 hectares and rising to a height of over 40 m. What happens during an excavation is that layers are removed one by one from the top down, revealing earlier and earlier occupation of the site. The top layer shows occupancy by Romans and early Islamic tribes, possibly sitting next to or on top of a late Assyrian occupancy. Below that it would appear that the Tell was occupied ca. 1950 BC by tribal Amorites (who had already occupied large parts of southern Mesopotamia including Babylon). This is seen as a transitional period between the early and middle Bronze ages. The presence of Amorites is identified through the presence of Khabur pottery, but they occupied the Tell for a limited period and only occupied one part of it. Later, but in many ways seen as alongside the Amorite occupation, the Mitanni (ca. 1500-1300 BC) occupied the site. They built a settlement suburb, a palace and an adjacent temple. Before that the site was occupied by Assyrians. Going back further in time, Tell Brak was still a large urban complex ca. 3000 BC but centred around the original Tell (or mound). Evidence was found indicating Akkadian (thus southern) control of the site. Houses, a large public building, an audience hall and temple as well as administrative and 'industrial' areas were found. Cuneiform tablets and bullae (clay seals) confirmed their presence. There is also evidence of Hurrian occupation, possibly during the period ca. 2300-2000 BC. The Mitanni was a late Bronze Age kingdom of the Hurrians and, as we have said, they occupied the Tell sometime later. Signs would indicate that Tell Brak was more or less permanently occupied during the third millennium BC despite the fact that many other Tells were abandoned.

Recent excavations have yielded finds now dating back to the mid-5th millennium BC (late Copper Age) showing that there were already monumental buildings on the Tell during the Ubaid Period. The evidence is clear that the buildings were there before the arrival of a Uruk colony from South Mesopotamia (it was at this time that a large 'old town' was built outside the walls of the Tell itself).

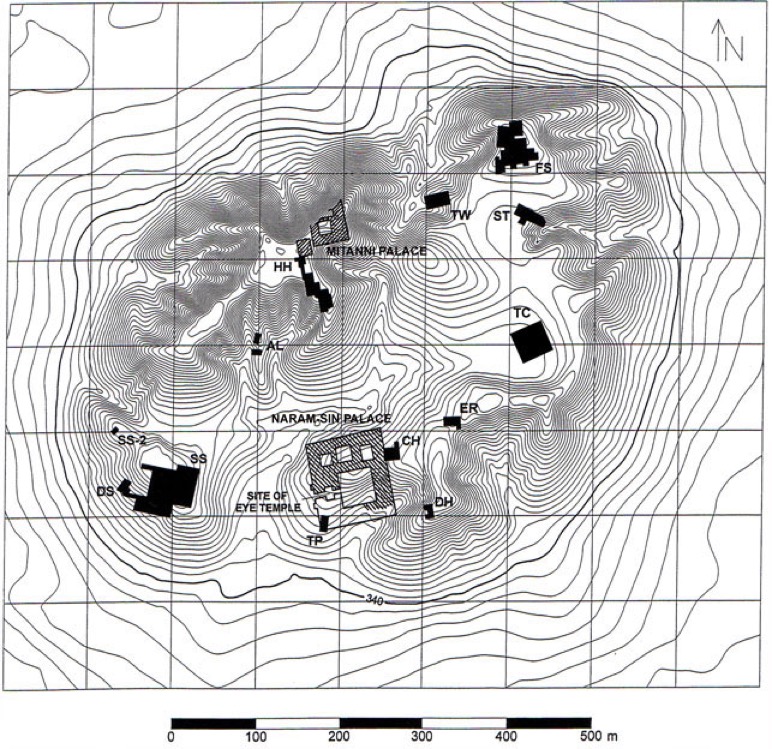

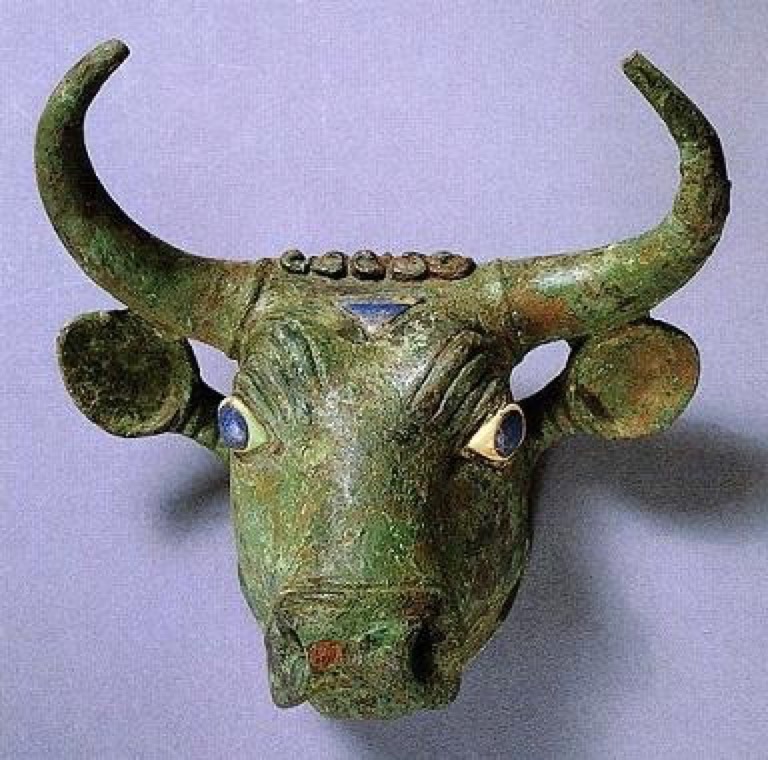

The most notable 'find' was the so-called 'Eye Temple' which confirms that Tell Brak was a large religious and economic complex ca. 3800 BC, i.e. before the expansion of southern Uruk into the North ca. 3600-3200 BC. Above we have a few of the thousands of 'eye idols' found there.

However there is also plenty of evidence that some monumental structures date from the late-5th millennium and early-4th millennium. These were associated with organised craft activities, the manufacturing of prestige goods, and the organisation and provisioning beyond household levels. The Tell was some 40 m high, and these 5th-4th millennium excavations are 11 m under the surface of the Tell.

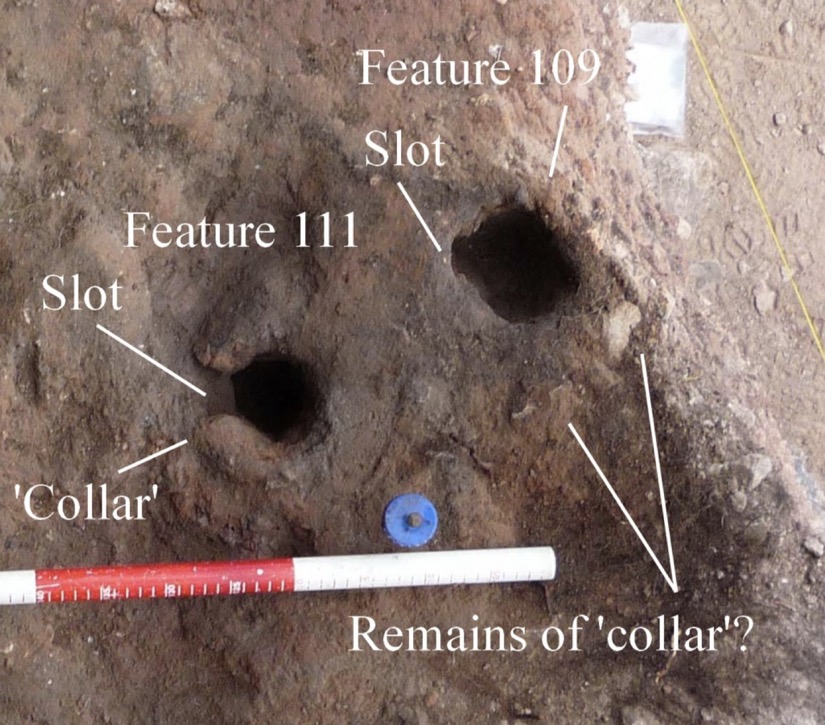

Experts talk of monumental structures, but what does that really mean? Let's take an example. In one corner of the Tell, near the north entrance, they found an important building consisting of a massive entrance with towers on either side. The doorsill is a single massive piece of basalt, 1.85 m by 1.52 m and 29 cm thick, but basalt is not native to the region near the Tell. The mud-brick walls were 1.85 m high. In the excavation so far, the building appears to have consisted of two empty rooms. The walls were not set in foundations but the whole building was set on a platform of large cobbles and red clay 80 cm deep. On one side of the building there was an open area covered in white (waterproofed) lime plaster, with a wooden flooring at the entrance to the area. The area had been replastered at least three times and the main building rebuilt at least once. Additional smaller empty rooms were outside the main entrance (shops? storage? offices?). No one knows what this building was used for, except that it does not look like any known religious building. This building has been dated to the late-5th millennium BC.

Another building, more modest in size, was home to basalt pounders, grinding stones, clay spindle whorls, polished stone palettes, delicate obsidian blades, neatly ground obsidian discs, large quantities of mother-of-pearl inlay cut from local mollusc shells, and unusually long flint blades. Alongside all this there were large ovens and plastered basins and bins. And there were also a large number of clay seal impressions, including door seals indicating 'official' locking.

In addition there was a building with ovens for pottery, including for new designs such as large open bowls and small mass produced bowls. Elsewhere there were piles of raw flint and obsidian, lots of jasper, and marble and serpentine used for beads. A large amount of bitumen was stored, as well as clay spindle whorls, and lots of sheep and goat remains. Most of this material would have been collected and transported some distance to the Tell.

Above we have the most extraordinary find, a 16 cm tall obsidian and white marble 'chalice' dating to ca. 4000 BC. The obsidian core has been ground out to make a drinking vessel. The two parts are held together with bitumen, and the upper rim would have probably had a gold or silver insert. This kind of vessel is clearly a prestige item and shouts 'social stratification'. It was found in the same room as a lion stamp seal which in later periods would be considered a 'kingship' artefact. We have to remember that these items were not found together by chance: they were excavated and found at the same level, and are thus presumed to be related to the same period. Ownership seals have been identified in central and northern Mesopotamia as early as ca. 7000 BC, and were common practice in northern late Ubaid period sites (ca. 4800-4500 BC). The use of this type of seal clearly would have signified ownership or control, a major facet of South Mesopotamian administration where ownership seals were linked with 'numerical tablets' and pictographic script (Uruk ca. 3400 BC). At Tell Brak there are also a few examples of the use of two different seals on the same item, suggesting two different but equal officials. So we have organisational complexity on an 'industrial' scale site in the North at the same time as in the South we would have found a residential-based 'cottage-industry'.

As we have already mentioned this is an excavated site. And above what we have described sat a later 'ritual' building (but not a temple) with a courtyard containing a number of ovens used for large-scale cooking of meat served on mass-produced plates (judging by the serving pottery). This might have been a 'feasting hall', but given that it was near a gate it could also have been a 'travel lodge'. Everything suggests large-scale patterns of production and consumption, and thus large units of labour. And thus the cultivation of land sufficient to support non-agricultural administrators and craftsmen. Monumental buildings required large amounts of straw and water for making mud-bricks, and this would have required considerable investment in time, materials and labour. The 'numerical tablets' found in Tell Brak reflect housekeeping of labour requirements and the control of manpower. On top of all that there is a nearby boundary wall marking the limit of the so-called 'palace area' that dates back to ca. 4400 BC suggesting that the area remained 'monumental' or 'sacred' for over 2,000 years (it includes the 'Eye Temple').

The area excavated on Tell Brak is about 30 hectares, but there was a suburban area of about 300 hectares built around the Tell. Some parts of this suburban area, all at least 300 m from the Tell, date also to the late-5th millennium BC.

For more information on the excavations and 'finds' at Tell Brak check out this website, which also has a page dedicated to the example of 'early warfare' mentioned above.

What we have seen for Tell Brak kills the idea that the poor old upstream northern 'dry-land' farmers were only stimulated into economic activity and big building plans after contact with the southern Mesopotamian 'core'. It is true that Uruk (sometimes also known as Warka in the South) was the largest and greatest of early cities and the site yielded the earliest written documents (cuneiforms) and had the largest public buildings constructed in the late-4th millennium. But we don't know much about how Uruk evolved to become that mega-city, and experts have built their understanding through excavations on simpler small farming settlements. Whereas the excavations on Tell Brak clearly show a spatially significant northern 'industrial' Mesopotamian settlement with monumental buildings of the type not seen in the South. And to top it all Brak, Tepe Gawra and Hamoukar produced an almost identical pottery over an area of some 300 km (a distinctive form that has also been found in south-eastern Turkey).

So in Tell Brak there was a proto-urban development up to 1,000 years earlier than originally thought, and possibly earlier than developments in the South. This does not put in doubt that (judging from pottery shards) by ca. 3400-3200 BC Tell Brak was colonised by Uruk. Some experts have suggested that this colonisation was violent, others that it was largely driven through the creation of merchant colonies. And this does not mean that in the future we may not discover that southern Mesopotamian proto-urban development dates back before the Uruk Period. What Tell Brak has done is make it far more difficult for experts to define the term 'urban' and to know how to attribute it to sites in both the North and South. At best urbanism has become a fuzzier concept, but as one expert pointed out, if you were living in Uruk or Tell Brak you would certainly know that you were living in a place that was totally different to other, smaller city-towns in the region.

Stop and think

My initial objective with these three webpages on Mesopotamia was to create a very short history of mathematics and physics (natural sciences) as seen before the Greeks. On the first webpage I tried to cover the history of writing and numbers (including cuneiforms) and astronomy (including lists of omens). On the second webpage I tried to cover the “science of crafts” with cave painting, tool making (including stone tools), fire and pre-pottery technologies, weaving and textiles (ca. 60,000 BC), ceramic sculptures and pottery (starting ca. 29,000 BC), boats (ca. 10,000 BC), the wheel (ca. 6500 BC), and early patterns of trade and maps (ca. 12,000 BC).

With this third webpage I wanted to cover irrigation and agriculture, and the Copper, Bronze and Iron Ages with their engineering, metalwork and weapons. The question about 'what' I wanted to cover was easy, the 'how' is far more problematic.

When we kicked off on our first webpage, starting at ca. 10,000 BC, the world's population was somewhere between 4-10 million people. When we quit this third webpage on Mesopotamia the world's population will have grown to 50-100 million. Like it or not Man had discovered specialisation and had understood how to exploit that through markets. And what did Man specialise in? Whichever way you look at it Man specialised in technology. One view is that he went from mastering Stone, to Bronze and then to Iron (we are now in the Silicon Age). Another view is that Man moved from Hunter-Gatherer, through Agriculture, the Metal Age and then the Machine Age (we are now in the Information Age). Others look at energy as the key definer of human development with Muscle, Animal, Agriculture, Fossil, … Yet another view is to look at information with Genes, Language, Signs and Logic, Writing, …

Perhaps what is common to these different views is the role of 'tools'. Oldowan chopping tools, hand axes, blades, needles, twisted cord, the awl, fire (who could forget fire), … What we find usually is that people try to outline a chronology and then fill in what fits, but I want to look at science, mathematics, engineering, and 'add a bit' to help understand the context and chronology of a discovery or invention.

Sea levels and irrigation

Despite the facts, many authoritative sources still promote the idea that complex urban-city-states first emerged in South Mesopotamia with the Uruk Period (from ca. 4000 BC). No matter where the first state-level societies emerged, we still don't know why they emerged. Agriculture, bureaucracy, large-scale irrigation, centralised political and religious hierarchies, warfare, inter-regional trade, control of labour, or any combination of the above, could be responsible for the emergence of the first city-state.

We will look at these civilisations (North and South) based upon the idea that their development was certainly linked to so-called paleoenvironmental conditions. The basic idea is that the Tigris-Euphrates river system discharges into the Persian Gulf, and today covers more than 800,000 km² (and is the 12th largest drainage basin in the world). Whichever way you look at it the key climatic determinant for human life in what was and still is a semi-arid region is water availability. The river system was and still is dominated by snowmelt hydrology, which makes it susceptible to climate change impact. For example virtually all the waters of the Euphrates are generated in Anatolia from rain and melting snow (the Tigris drains from southeast Anatolia but the Taurus mountains are still part of the so-called Alpide belt). The emergence of the Akkadian Empire (ca. 2334-2154 BC) has been linked with the productivity of the rain-fed agricultural lands in the North and irrigation in the South. Drought has been used to explain the Late Bronze Age Collapse (ca. 1200-1150 BC) and there are strong indications that a decrease in rainfall produced a minimum in the discharge of water into the Persian Gulf during the period ca. 1150-950 BC. This correlates with the decline of the Babylonian and Assyrian Empires in the period ca. 1200-900 BC.

In recent times the Tigris, Euphrates and Karun rivers flow along the length of the Mesopotamian lowlands into the Shatt-al-Arab estuary, a marsh area and deltaic system at the head of the Persian Gulf. Initially (in the North) the rivers are deeply incised into the plateau, whereas in the South the slope is less than 1% and they meander through the floodplain. The Persian Gulf is very shallow (average depth of 35 m) and was well above sea level during glacial times (some freshwater lakes and shallow basins would have existed). With the reduction of the ice volume during ca. 12,500-8000 BC the sea level rose by about 75 m (about 32 m below present-day levels). This would have still meant that much of the Gulf floor would have been exposed until ca. 6000 BC, but with a broad river valley and marshes and small lakes in the flatter areas. Sea levels continued to rise through to ca. 4000 BC, reaching about 2.5 m above present-day levels. No matter how you look at it, the emergence of the southern Sumerian civilisation post ca. 4500 BC must have been substantially influenced by the marshland and lakes created in the estuary leading into the Persian Gulf. In fact experts think that marshlands would have extended in as far as the city of Ur (the site is now quite some distance inland). Some experts have even suggested that the ancestors of the Sumerians might have arrived across the Gulf floor and not from the North. This might provide a rational basis for their legends and gods that are often set against a background of rivers and marshes (e.g. Enki, Abgal,…, or even the Epic of Gilgamesh).
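To get a feel for the sea-level figures quoted above, here is a minimal arithmetic sketch. It assumes (my reading, not something the sources state) that "about 32 m below present-day levels" is the level reached at the end of the ca. 12,500-8000 BC rise, and it simply averages the rise over each interval, which real sea-level curves certainly did not follow. The function and variable names are purely illustrative.

```python
# Approximate figures quoted in the text (all "ca." values).
RISE_M = 75.0            # rise during ca. 12,500-8000 BC
LEVEL_8000BC_M = -32.0   # assumed level at ca. 8000 BC, relative to today
LEVEL_4000BC_M = 2.5     # highstand at ca. 4000 BC, relative to today


def mean_rate_mm_per_year(rise_m, start_bc, end_bc):
    """Average rate of rise in mm/year between two BC dates (linear assumption)."""
    years = start_bc - end_bc
    return rise_m * 1000.0 / years


# Implied level at the start of the rise, relative to today.
level_12500bc_m = LEVEL_8000BC_M - RISE_M

# Average rates for the two phases described in the text.
rate_early = mean_rate_mm_per_year(RISE_M, 12500, 8000)
rate_late = mean_rate_mm_per_year(LEVEL_4000BC_M - LEVEL_8000BC_M, 8000, 4000)

print(f"Implied level ca. 12,500 BC: {level_12500bc_m:.0f} m")
print(f"Mean rise 12,500-8000 BC: {rate_early:.1f} mm/year")
print(f"Mean rise 8000-4000 BC: {rate_late:.1f} mm/year")
```

Under these assumptions the sea would have stood roughly 107 m below today's level at the start of the rise, far deeper than the Gulf's 35 m average depth, which is consistent with the Gulf floor being dry land in glacial times.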

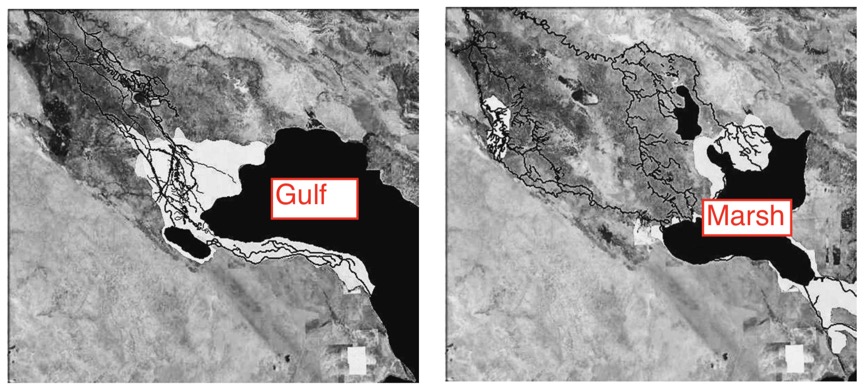

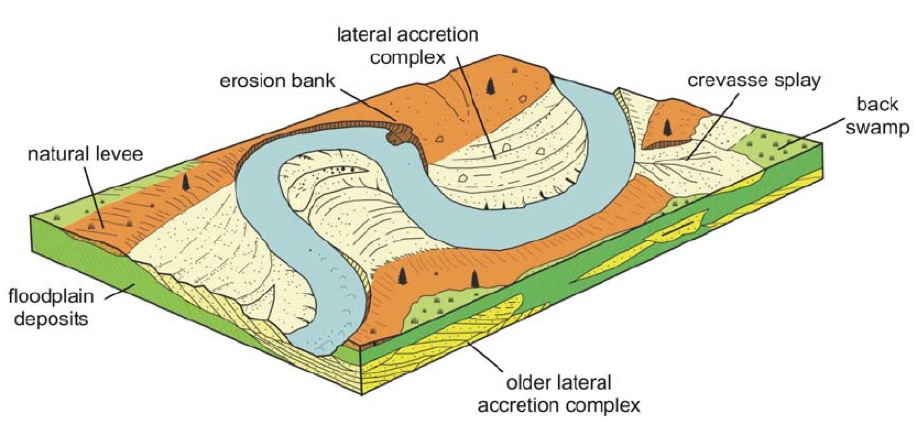

Let us for a moment step back in time. Viewed from a satellite flying over the region, Mesopotamia (particularly the South or Lower Mesopotamia) is like a trench sitting in front of the Zagros Mountains. It is partly occupied by the sea and partly by sediment brought down by the Tigris and Euphrates rivers. In addition, at the 'mouth' there is material infilling from Wadi Batin in the south, and from the rivers flowing down from the Zagros. The Lower Mesopotamian Plain had (and still has) a very low gradient (about 1%) below Baghdad, meaning that the river courses are unstable. They can frequently change because of sediment accumulation. All this has created a region of lakes and marshes. In the past the whole region has thus been determined by (a) rising sea levels up to a maximum ca. 4000 BC, (b) sediment accumulation, and (c) a pinching effect on the upper part of the delta. Below we have two images, the first is what the delta might have looked like around 3000 BC or 4000 BC, and the second is what the delta looks like today.

The idea that the cities of Sumer and irrigation went hand-in-hand looked convincing. At the time the cities were still pre- or proto-literate, so cuneiform texts justifying this actually came from much later periods. In addition, early excavations logged buildings and artefacts, not environmental evidence. Luckily winds periodically re-exposed long-buried walls, artefacts, and irrigation canals, and allowed experts to discover a pattern of urbanisation (and irrigation) that started more than 5,000 years ago. However, this 'pattern' is a mix of surveys over limited areas, presumptions about where watercourses should be, aerial photography, hypothesised linear connections where no direct evidence is visible, and a strong bias towards elite sites previously excavated and where cuneiform texts have been found. Today experts have a much more nuanced view of how the South developed.

During the Neolithic Ubaid Period (ca. 6500-4500 BC) irrigation and urban development focussed on villages built on river levees (dykes) bordering swamps and marshes, and including (ca. 4800-4500 BC) an extensive canal network between major settlements. It is certain that innumerable smaller, scattered sites are still buried beneath the sands. What we see today are some Ubaid towns that remained inhabited into the Uruk Period (ca. 4000-3100 BC) and today remain 'exposed' because they are situated on rock 'turtlebacks'. Our understanding of the irrigation network is far less than partial. As an example, the main water supply into the city of Uruk (now totally desiccated) was an important waterway that could not have depended on the levees visible today. Another example is a simple line on an aerial photograph that was probably a substantial canal the size of a Tigris distributary. Between Uruk and Ur (ca. 40 km) there was a system of dykes that controlled the 'tidal flushing' that influenced cultivation regimes as far inland as Uruk, encouraging date-palms and levee garden crop production. In fact late Uruk seals and tablets depict palms and hunting scenes with pigs stalked among reeds. Proto-literate texts include dozens of ideograms for reeds, waterfowl, fish, dried fish, fish traps, and cattle and dairy products (and 58 terms for wild and domestic pigs). Dried fish was the principal food at the time, and the offices of 'fisheries governor' and 'fisheries accountant' endured for 1,500 years.

We have to remember that initially Ur was a city-port of what is called the Eridu basin, which now is a patchwork of new sediments, old surfaces, and migrating dunes (even Lagash was once a city-port). At the time this area was probably less an intensely irrigated area and more a marshland used by wetland cattle-keepers who harvested thousands of tons of reeds and rushes for mat-weaving, fodder, fuel and construction needs.

The 'heartland' of the South was north of Uruk where the trend was to bigger settlements as the wetlands were drained for agriculture (experts think that most of these settlements are still buried). Canals were built facilitating boat traffic from one settlement to another. As the climate dried and the sea level dropped these canals were extended further south and east. The larger settlements certainly grew over time, but most did not have city walls and many were 'confined' by areas prone to seasonal inundations. Later (ca. 2600-2350 BC) the sea level rose again and many of the marshes were inundated, bringing in mollusks, marine fish and waterfowl. Texts found in the household of the wife of the ruler of Lagash (at the time a city-port) list a productive household of 1,200 people, including 100 fishermen and another 125 oarsmen, pilots, longshoremen and sailors. The economic activity of the household was fresh and salt-water fishing and the sale of fish and dried fish through merchants acting on behalf of the household.

The overall idea is that a few larger settlements had palm groves, gardens, temples, kilns, and sat on rock 'turtlebacks' protected from seasonal inundations by levees (we are still in the 3rd millennium BC). It was here that you would find intensive agricultural production, and reed and other marsh products for urbanised consumption. Inundations were managed and exploited, but in most cases there were no signs of field irrigation.

We are still in the 3rd millennium BC, but if we look to the north of Ur we find a series of watercourses interconnecting the Euphrates with the Tigris. However, texts still clearly show the importance of marshland resources like reeds, fowl, pigs, etc. and they record bitumen, boats, mats, and standardised fish baskets.

Above we have on the left the Mesopotamian delta at the time of the city-state Ur (ca. 3000 BC) and on the right the same river delta in 1972. It is worth noting that some of our ideas concerning Mesopotamia were created in the 19th century, when marshes meant disease and it was assumed that anyone 'sensible' (even 5,000 years ago) must have wanted to convert them into nice cultivated agricultural land. Marsh must be 'waste' and could not have economic potential. Irrigation and plow agriculture must be the only objective of a sensible administration! Today we see that marshlands can be economically viable and the destruction of wetlands can be a major economic and environmental disaster. What we can see now is that the early Sumerian city-states were actually more like islands embedded in a marshy plain, situated in a vast deltaic marshland. Waterways were more for transport than irrigation and reeds were the key economic resource. Irrigation canals would come later, but the builders would enter into an infernal cycle - a constant need to invest in ever-more-extensive irrigation systems in order to emulate the natural wetland productivity they were replacing.

Irrigation and agriculture

As we move into the 2nd millennium BC, and as the climate dried and became more seasonal, the Euphrates became an important source of irrigation water.

Before moving into the 2nd millennium BC let's just review what we know. There was a slow transition from hunter-gatherer to fully developed farming between the 11th and 9th millennia BC (Neolithic Period). This was most evident in the Levant (Jericho) and Anatolia (Çayönü). Einkorn wheat, emmer wheat, hulled six-row barley, lentils, chickpeas and common vetch were the first crops domesticated. Domesticated flax for oil (linseed) and textile production appeared in the Balikh Valley in the mid-8th millennium BC. Agriculture was rain-fed, small-scale hoe farming. Tools were just stone sickles, cutters and vessels for harvesting and food processing. South Mesopotamia did not appear to play an important role at this time. For some experts Mesopotamian prehistory starts with the Ubaid culture in the South (ca. 6500-3800 BC), for others it also started in the North with the Halaf culture (ca. 6100-5100 BC).

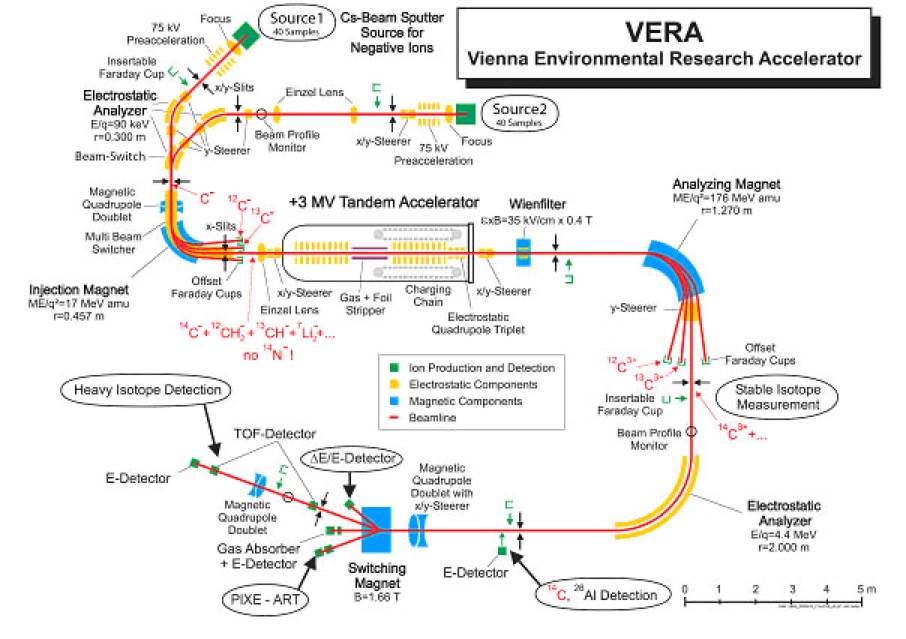

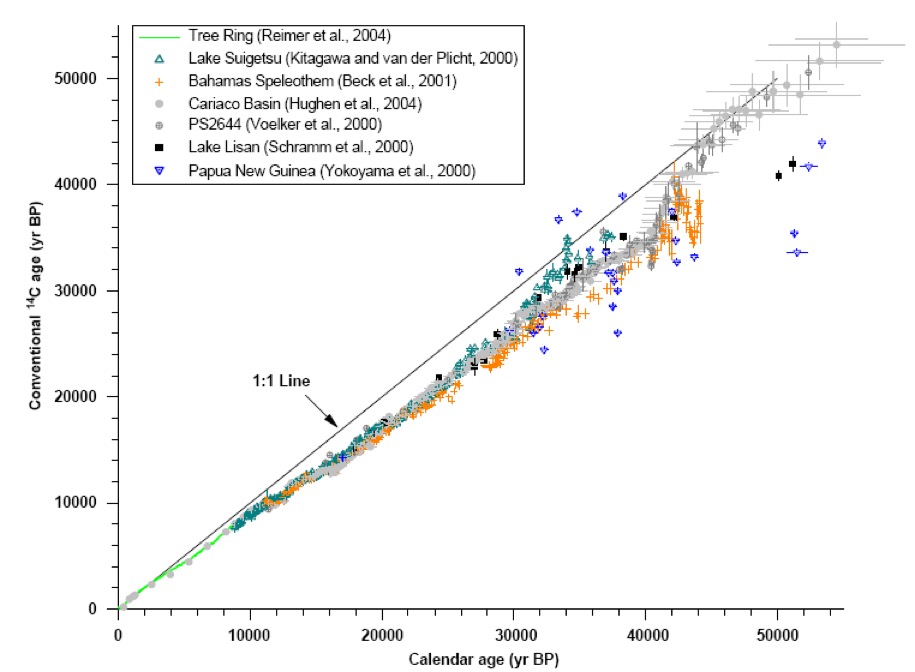

Initially everything focussed on how an 'urban revolution' emerged and how large-scale irrigation led to the "coercive control of populations by bureaucracies". Things changed when ceramics could be accurately dated using the Carbon-14 technique. Experts were now able to document long-term settlements from prehistory to the present. The result was that they actually saw that irrigation developed from a small-scale basis that did not require state control. In fact in Lower (South) Mesopotamia the Euphrates and Tigris naturally irrigate by gravity flow. And we now know that the earliest proto-urban developments in the South were based upon a wetland providing a diversified economy of hunting, fishing, construction materials and animal fodder (cereal was not a major crop). Irrigated agriculture appeared in the 6th millennium BC in central Mesopotamia (not the South). It was here that domestic animals began to be used not just for their meat and carcasses, but also for their milk and labour (transport and plowing with the scratch plow). Irrigated cereal and date-palm gardens first appeared in the 5th millennium BC in Ubaid sites, even if today they are usually associated with later southern Mesopotamian cultures.

Then came the focus on urban societies in Uruk in southern Mesopotamia. Again this was defined as an 'urban revolution' driven by furrow irrigation in narrow long parallel strips bordering newly dug canals. Some experts underline the importance of the seeder plow, others of animal traction, yet others of the threshing sledge pulled by a donkey, and finally other experts prefer to stress the most striking of ceramic artefacts, the low-cost high-fired clay sickle. Nor should we forget the idea that the emergence of temples provided the ideological coercion that was needed to make villagers provide the work needed to dig and maintain large-scale irrigation networks. Productivity gains were enormous but it was the temples that benefited.

This 'Sumerian miracle' has been challenged by finds in North Mesopotamia which showed that a proto-urbanism developed earlier in the North, and that clay sickles and flint blades appeared in both the North and South.

The analysis of written texts from the late-4th millennium BC added to the debate. One of the most important 'wisdom texts' outlined what work was needed during the agricultural year - "Annual flooding in the spring, hoeing and plowing to prepare the fields, harrowing, sowing, maintaining the furrows, irrigating (three or four times), harvesting, threshing, winnowing, and finally storing the harvest". Other scholarly compositions offer glimpses of agrarian practices. One tablet listed Sumerian terms on cereal and date-palm cultivation together with their Akkadian translations. Even law codes, such as the Code of Hammurabi, contained provisions on agricultural matters, such as leasing and cultivation of fields, maintenance of irrigation devices, grazing of sheep on fields, palm-groves, the renting of animals for agriculture, and the stealing of agricultural devices. Given the southerly geographical origin of the Sumero-Akkadian scholarly tradition, this exceptional documentary situation is, however, limited to irrigation agriculture and ignores the traditions of dry farming in the North. In this region (and, in many respects, in the South as well), everyday records, such as letters and, more importantly, economic texts recording the expenditures and deliveries of agricultural goods, offer the most complete evidence for the evolution of farming in Mesopotamia between the 3rd and 1st millennia BC.

The earliest documents (Jemdet Nasr Period, ca. 3100-2900 BC) attest to the existence of big estates with hundreds of dependents engaged in large-scale irrigation farming of barley, emmer, and probably date-palm. Other tablets mention a tripartite system of land tenure that would last until the mid-2nd millennium BC. Part of the estate’s land was directly cultivated for 'official' needs, while the rest was allocated to dependents as subsistence fields, and some parcels were leased to farmers against a fixed revenue. The Early Dynastic Period (ca. 2900-2350 BC) witnessed the appearance of the seeder plow. By adding a funnel to the ard at the time of sowing, Sumerian farmers were able to drop cereal grains at regular intervals directly into the furrow, minimising seed loss and increasing productivity. It was drawn by oxen or donkeys, and the sowing season extended over several months, from late summer to early winter. Fallow rotation may have been applied. Harvest occurred in spring and was performed with a saw-like tool. Copper sickles appeared in the archaeological record in the early 3rd millennium BC and are first attested in cuneiform texts from the Sargonic Period (ca. 2300 BC). In Ur (ca. 2100 BC), they became the standard harvesting tools. Sumerian fields were large (44-46 hectares), divided into furrows of various lengths, and separated by strip-shaped zones of overgrowth protecting the soil from wind erosion. In the marginal areas grew spices such as coriander and caraway. Field crops were harvested three times a year and included flax, legumes and vegetables, especially garlic and onions, of which a dozen varieties were known.

In Upper (North) Mesopotamia, the beginning of the Early Bronze Age (ca. 3100 BC) saw the development of a material culture independent from southern traditions. This corresponds to a decline in urban life and a process of ruralisation in small centres and hamlets. Located in the dry-farming zone, they relied on rain-fed cereal farming at a time when the climate was relatively wet. The increasing presence of caprids (sheep and goats) in faunal remains and the decrease of wild species indicate specialised pastoralism in the steppe, probably linked to textile production. Mari (ca. 2500 BC) probably relied on irrigation for its subsistence, while the plains near the Khabur Triangle saw the emergence of massive cities centred on a dry-farming hinterland. Analyses of satellite images show radiating roads used by farmers for travelling to their fields, while land surveys reveal traces of manuring on the fields. Tell Beydar (ancient Nabada) yielded the earliest written evidence for agrarian management in Upper Mesopotamia (ca. 2400 BC), describing a large estate engaged in dry cereal farming and supervising sheep and goat herding.

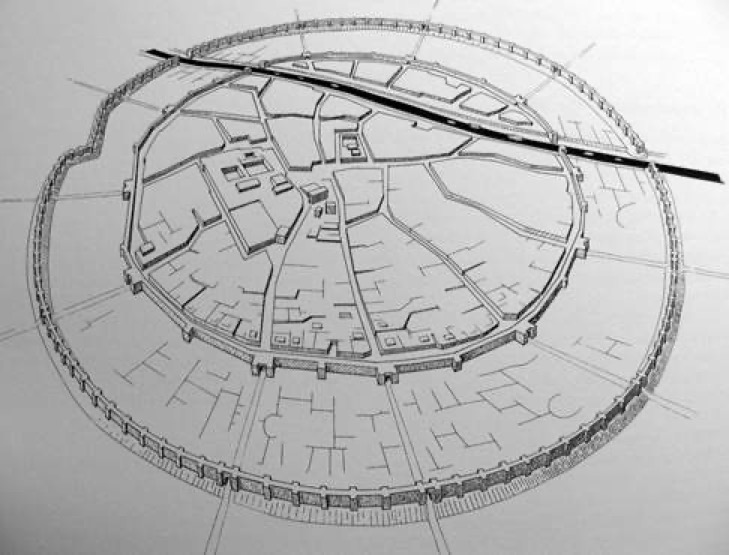

We will spend a little time looking in more detail at the city-state of Mari (it's nice to look at one of the lesser-known sites). This city flourished as a purpose-built trading centre (ca. 2900-1759 BC), placing it in the Early and Middle Bronze Age, and in fact it was a copper and bronze smelting centre. As a purpose-built city it was one of the earliest known planned cities. Originally the city was 4-6 km from the Euphrates, but today the river has washed away a substantial part of the old city. It is said to have once been connected to the Euphrates by a linking canal for domestic water, and it was this linking canal that eventually became the new route for the river. The Euphrates was navigable and ships could actually use the canal for transporting finished goods. The inhabitants also built a 16 km long (100 m wide) irrigation canal and another 126 km long straight navigation canal which allowed boats to bypass the winding Euphrates (and on which Mari collected tolls).

The city was designed prior to construction, and consisted of two concentric rings, the outer as a protection against flooding, and the inner a defensive wall. All the streets sloped and included a complex drainage system, important since the buildings were made of mudbrick. When the city was discovered in 1933 it immediately yielded over 15,000 tablets, most dating from the last 50 years of the city's existence. The intriguing detail is that archaeologists are not sure who actually built the city; the builders are simply described as an "unidentified, but well-organised, complex society". During the city's 1,200-year existence, it saw 3 phases (I, II, III). During Mari I there were no religious or palatial structures, but many houses and craft shops producing a wide variety of goods (beyond metalwork). Then it would appear that the city was abandoned in ca. 2650 BC, only to be re-inhabited in ca. 2550 BC. These new inhabitants levelled the old city and built a new one (Mari II). It is thought that this city controlled a vast area in North and Middle Mesopotamia. Mari II was destroyed by Naram-Sin (ca. 2254-2218 BC), grandson of Sargon, as he expanded the Akkadian Empire. He razed the city and walls, and built another city (Mari III). When the Akkadian Empire fell (ca. 2150 BC) Mari again dominated North Mesopotamia. To cut a long story short, Mari III was finally razed by Hammurabi in ca. 1758 BC. As was the case in many places, when he burnt the palace he inadvertently fired the tablets, preserving them for later excavation.

Above we have a satellite view of Mari (a World Heritage Site) in 2014, and we can compare it with an image taken in 2011 (below). What we can see (marked in red) are pit digs (there are hundreds) made by ISIS (an unrecognised proto-state or band of terrorists). In the nearby ancient Greco-Roman city of Dura-Europos, founded ca. 300 BC, nearly 4,000 pits have been counted.

Around 2200 BC, many city sites collapsed, a phenomenon that has been linked to the “4.2 kiloyear event”, a climatic anomaly visible in most palaeoclimatic records. Recent studies, however, suggest that some places were less affected than others, and that many agrarian societies in the North were capable of resilience in the face of climate change. Both the palaeobotanical and written records show a decrease (ca. 2000 BC) in drought-sensitive wheat and emmer, in favour of more stress-tolerant barley. In the Mesopotamian lowlands, these problems may also have been triggered by increasing salinisation of the soil caused by poor drainage. In any case it would appear that the crisis was far less dramatic in the South, where irrigation prevailed, than in the dry-farming North. The flourishing of Neo-Sumerian city-states such as Girsu/Lagaš, and the numerous administrative records, attest to the vitality of agriculture ca. 2100-2000 BC, especially in the time when the dynasties of Ur established hegemony over Lower Mesopotamia.

After the fall of the Ur III empire (ca. 2004 BC), kings of Amorite descent took over Mesopotamia (ca. 2000-1600 BC). The Old Babylonian documentation is more detailed than any other, especially in Mari (ca. 2900-300 BC), where the union of both sedentary agriculturalists and nomad pastoralists under a common leadership offered an exceptional overview of tribal life and culture, as well as pastoral nomadism in the plateaux west and east of the Euphrates. The Mari archives document the cultivation of winter cereals (mostly barley) and pulses like broad bean, pea, and chickpea. Sesame and barley were the main summer field crops, and vines were grown hanging on trees in orchards that also contained fig, apple, pear, and pomegranate trees. Mari agriculture was dependent on irrigation, which required drawing the water of the Euphrates and Khabur several kilometres upstream in order to irrigate fields located on terraces above the river level. Some of the Mari fields were located directly on the valley floor and were farmed with small-scale irrigation, but they were directly exposed to the destructive spring floods of the rivers, which caused frequent harvest losses.

During ca. 2900-1700 BC Mari built two successive and independent irrigation systems, and each is said to have relied on a canal of some 20 to 30 km. In their texts such canals were called 'rakibum' or 'one which rides', suggesting that the canals ran over land using dikes. These canals were only used in the irrigation season and they did not have any villages along them. There are mentions of a canal 120 km long dating back to ca. 2900 BC. Records also point to the existence of two canals, one for irrigation and the other a domestic water supply. There may also have been a navigation canal built more or less at the same time. This particular version of Mari was destroyed by Hammurabi in 1758 BC. This description is subtly different from the description mentioned earlier, and I've kept it to highlight the differences in understanding, interpretation and description between academics and archaeologists. One says 16 km, another says 20 to 30 km; one says 'was', another says 'may'.

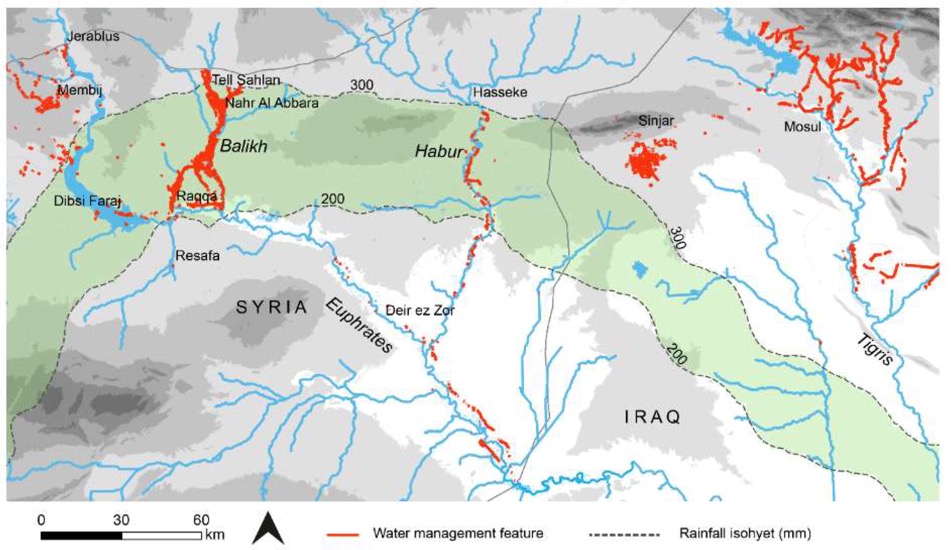

It is difficult to get a clear idea of the scale and effort that was put into building canals. But above we have a remote sensing view of about 100,000 km². What the researchers have done is map known archaeological features such as canals and irrigation areas dating up to ca. 570 AD (so including early Islamic features as well), and they have been able to isolate a total of 2,900 km of canals and qanats (underground channels) still visible.

A different analysis of satellite photographs shows that there were over 6,000 km of tracks, still visible today, used to move food and resources to the urban centres in this region. This was the time when barley was the main crop, and there was at the same time a drastic reduction in wild species. Sheep and goats remained the most important livestock. Models show that a 'village' and its surrounding catchment area under cultivation could support around 2,500 people. A town of 6,000 to 8,000 would need six satellite villages within 5-10 km to provide food for them. So you can see the importance of irrigation, because a city with more than 15,000 people would be very susceptible to fluctuations in annual rainfall and could collapse if faced with a multi-year drought.

Contrary to contemporary Babylonia, where royal land was leased to independent entrepreneurs, the Mari farmers were palace employees who were expected to produce a quota of crops fixed in advance. The sowing season was determined by fluctuations of the river and the rising of a star opportunely called 'the Yoke' (Arcturus). Tasks of the growing season included maintenance of canals, weeding, irrigation, and protection against locust invasions and other pests, like birds or gazelles. Harvest occurred in spring, often in haste because of the risk of flooding. Grain was then carried away, threshed by oxen on threshing floors outside of the flood’s reach, and finally stored in granaries. When the harvest was not destroyed by war, locusts, or flood, this agriculture was very productive. Although absent from the middle Euphrates, date-palm was one of the major crops of Babylonia. Palm groves were exploited by specialists who leased them from private owners or the crown. According to Hammurabi’s laws, the planting of a palm grove required a three-year investment, during which time the palm shoots grew on the mother trees before being planted in an enclosed garden, watered, and cross-pollinated. Before harvest, the gardener was assigned a quota to be delivered to the landlord, often half or two-thirds of the unripened dates.

In 1595 BC, the Old Babylonian dynasty established by Hammurabi fell to a Hittite raid, and Mesopotamian history became more obscure. It would appear that ca. 1500 BC increasing aridity affected the region, and during the Kassite Period (ca. 1600–1150 BC) settlements became fragmented and agriculture, whilst remaining extensive, became dispersed. The archives of Nippur show that irrigation was concentrated only in the south and east of the city, and 'water drawers' were in use (bucket irrigation). Horticulture was also found at Nippur and Ur, with gardeners producing spices (coriander and saffron) and dates. In the middle Euphrates, a series of large urban centres with strong communal institutions flourished using small-scale irrigation to cultivate cereal fields and orchards, especially vineyards, with a frequent mixture of crops and trees on the same parcel. Fortified farmsteads were an important feature of the rural landscape during the Mittanian and Assyrian periods. In western Upper Mesopotamia, the middle Khabur was progressively transformed (ca. 1800-1300 BC) from a zone of small-scale irrigation agriculture around isolated cities into a full-fledged settlement system articulated on a regional canal. However, judging from the archives, the Assyrians (in the North) invested in irrigation to supplement their traditional dry farming, but the results did not meet their expectations.

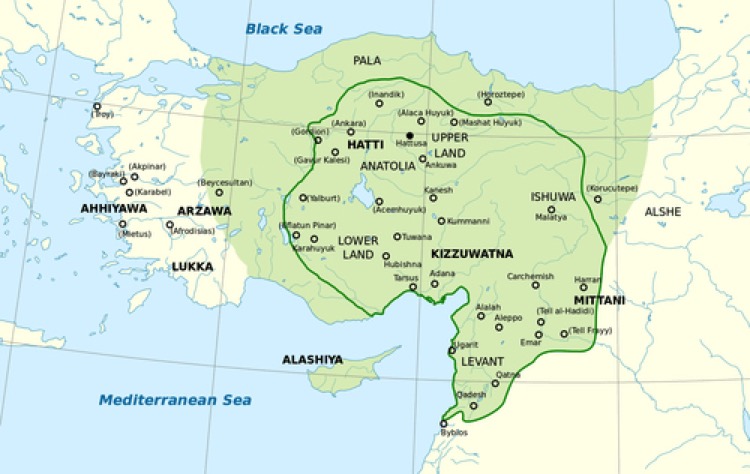

The Hittite Empire started to attack Mesopotamia ca. 1700 BC, and at their height they dominated Anatolia, northern Syria and northern Mesopotamia. They are known to have adopted both the religion and the legal system of the Sumerians, and above all they were among the first peoples to produce iron tools (ca. 1400 BC).

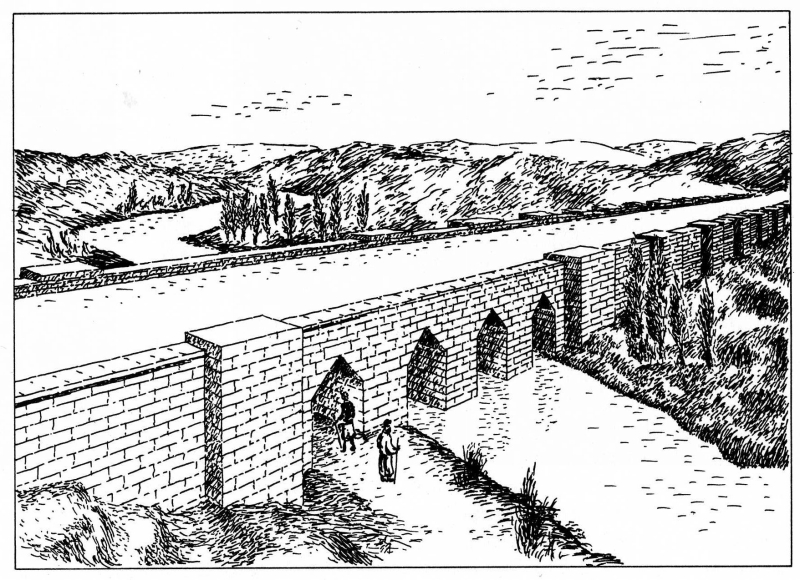

During the early Iron Age (1150–900 BC), aridity worsened, inciting social unrest and famine, which are recalled in Assyro-Babylonian literature and royal inscriptions. By ca. 950 BC, however, improved precipitation renewed Assyrian power and allowed for the political expansion of the Neo-Assyrian Empire (911–612 BC). Regional surveys show, from the Upper Tigris valley to the Turkish Euphrates, the multiplication of dispersed, small rural sites that can be identified with the hamlets mentioned in a cuneiform tablet recording the census of the district in ca. 700 BC. This was coupled with agricultural intensification relying both on dry farming and on large-scale irrigation canals around the capitals of Nimrud, Dur-Sharrukin, and Nineveh. A spectacular feature of this irrigation system was the Jerwan Aqueduct, built under Sennacherib (705–681 BC).

This aqueduct was built with more than 2 million dressed stones and used stone arches and waterproof cement. It could be the world's oldest aqueduct, and it certainly predates Roman aqueducts by at least 500 years.

Cuneiform records from Babylonia from ca. 1000 BC suggest that changes in river courses required heavy investments in irrigation and were accompanied by a re-allocation of fields under royal order. Previously uncultivated land was reclaimed and allocated to different social groups in a form of land tenure that is attested until the early Achaemenid times (ca. 550-330 BC). These innovations were part of a series of technological changes at the transition between the Bronze and Iron Ages, which saw the widespread adoption of iron tools, especially plowshares.

We have seen that it is quite possible that the first attempts at controlling water in the region were intended to mitigate the effects of destructive episodic flooding and protect already planted fields. On top of all that, the very small incline of the alluvial plain in the South and the fine texture of the soil easily gave way to waterlogging and salinisation, i.e. lack of natural drainage. But the local communities were successful in practicing what is called irrigated basin agriculture (perhaps ca. 5000 BC), just by alternate-year fallowing to allow the water table to fall after harvest. Wild plants would take over during the fallow year and foster evapotranspiration. The process uses wild plants to draw moisture from the water table, drying out the subsoil, thus preventing the water from rising and bringing salt to the surface. These wild plants also add nitrogen, and help prevent erosion of topsoil. When the field is again planted and irrigated, the dryness of the subsoil allows the irrigation water to leach salt from the surface and carry it down to the water table. However, with time the topsoil does become increasingly saline. In fact we know that Mesopotamian farmers would abandon lands for periods of between 50 and 100 years to allow them to recover.

We also know that Mesopotamians practiced joint tenure of the land, helping both to prevent plots becoming too small to support fallowing, and also preventing plots being isolated from irrigation water. On top of all that, we also know that marriages between farmers and nomadic groups allowed farmers to revert to pastoral nomadism during hard times, i.e. livestock was an insurance against drought. Experts think that the traditions of hospitality and mutual obligations derive from these earlier traditions, e.g. cooperation to dig, clean and maintain irrigation canals. Experts also think that these traditions would have stopped people acquiring excessive wealth and power. Leaders would have been “first among equals”, but any real power would have been through traditional family links. Of course, as pastoral nomadism disappeared and villages developed into cities, kin-based communities were replaced by residentially-based forms of social identification (enabling new political, religious and economic institutions).

Early irrigation practices did not evolve substantially, but ca. 3000 BC irrigation was rapidly extended, presumably to satisfy a rapidly expanding urban society (one set of canals from ca. 2500 BC has been estimated at 35 km long). Early writings tend to focus more on navigability than on irrigation for farming. A major canal built ca. 2400 BC actually provoked flooding and vast areas became choked with salt. But it was even earlier (ca. 3500 BC) that cultivators had started to shift from salt-sensitive wheat to salt-tolerant barley, and by ca. 2100 BC wheat represented only 2% of crops (wheat had disappeared by ca. 1700 BC).

Irrigation water in the region is dominated by calcium and magnesium cations, with the addition of some sodium. As the water evaporates and transpires, the calcium and magnesium tend to precipitate as carbonates, leaving the sodium ions dominant in the soil. If not washed away, these sodium ions are adsorbed by colloidal clay particles, deflocculating them and leaving the resultant structureless soil almost impermeable to water, impeding future plant germination. Salt will accumulate, making the ground water very saline. New water, together with poor drainage, lifts the water table and the dissolved salts rise into the root zone near or on the surface.

Salinisation meant a decline in soil fertility. Reports show that over a 300-year period (ca. 2400-2100 BC) yields dropped by 50%. Some experts have suggested that this was the reason why many cities were finally abandoned, others are not willing to commit to such a single, dramatic explanation. A few experts suggest that inefficiencies and corruption of the bureaucrats that took over and maintained the irrigation canals were to blame. What is certain is that smallish, independent principalities (the principal social unit at the time) fought over fertile border districts. Breaching and obstructing branch canals were common practices. Large irrigation canals were built to stop these conflicts, but they also produced flooding and over-irrigation, producing a rise in the ground water level, and salt pollution of the land. Sporadic salinity started to occur in ca. 2400 BC, and the move to barley was completed by ca. 1700 BC, but by then yields had also dropped by more than two-thirds. During this time many of the great Sumerian cities had dwindled to villages, and the power in the region had moved to Babylon.

And we should not forget that the region was an alluvial plain, so silting was a constant problem in irrigation systems, and even more so in the larger canals. We know that agricultural life settled near or on the irrigation network, starting with the Ubaid, ca. 4000 BC. The signs are that water was drawn only short distances from the main watercourses, and silt would not have been a major problem. The short branch canals could be cleaned easily or even replaced. There are also signs that these smaller settlements were occasionally abandoned, probably for socio-political reasons (a nice way of saying conflicts and wars) rather than natural ones, which actually allowed the lands to recover naturally. In a later period the land and water resources were almost completely exploited, which was certainly true in the Parthian Empire (ca. 247 BC to 224 AD). But even in the post-Ubaid empires, population pressure was such that long branch canals were built, and because of their small cross section and small slopes they tended to fill rapidly with silt. Such irrigation networks needed both periodic reconstruction and continuous maintenance. Clearly such an irrigation network is a fantastic asset, provided a strong central authority is committed to its maintenance. But in times of social unrest, small local communities would have been incapable of maintaining the system.

We think of the Tigris and Euphrates as massive rivers, a vast resource flowing into the Persian Gulf. But for the people living in the delta, their whole lives depended upon an elaborate system of canals and levees (dikes). And in ca. 2600 BC this network depended on inter-community cooperation between a loose alliance of small adjoining Sumerian city-states (south of what would become Babylon). One of these cooperation agreements, between Lagash and Umma, concerned a valley irrigated by a canal. Umma cultivated some land under lease, paying an annual fee to cover the costs of canal maintenance. When Umma refused to pay, hostilities broke out and part of the canal was destroyed. Lagash finally defeated Umma, forcing it to pay for repairs (and an extension). This was the first known conflict over water, and the treaty is the oldest on record (2550 BC). Here is a quick summary.

The Sumerians introduced irrigation and navigation canals, and by ca. 3000 BC more than 25 square kilometres were irrigated. The network included dams, canals, weirs and reservoirs, with the main canals lined with burned brick and joints sealed with bitumen (and large dykes strengthened by layers of reed mats). We have to remember that by ca. 2500 BC the population of Sumer was ca. 500,000, with about 80% living in the cities. Already in ca. 2700 BC the city of Uruk had a population in excess of 50,000 people. So agriculture, and irrigation on an industrial scale, was already essential to a population's well-being nearly 5,000 years ago.

Mesopotamia is not the only place where a sophisticated water culture evolved. Mohenjo-daro in the Indus Valley, one of the world’s earliest major urban settlements ca. 2500 BC, developed into a city of over 40,000 people. We know that dwellings had bathrooms and latrines and that street drainage channels were lined with bricks and flanged terra-cotta pipes of standardised dimensions. They also had large bathing areas, supporting the idea that ritualised cleansing was already important in their culture. Certainly between ca. 3200-2600 BC they also developed irrigation, drainage canals and embankments to control floods and protect crops and settlements. We also know that ca. 2200 BC the Chinese were building dikes, canals, and reservoirs as part of large-scale flood-control projects.

Experts think that in all cultures, irrigated agriculture leads to rule by an authoritarian elite, and that the more a society depended upon irrigation, the less democratic it was. The basis is that landed elites in arid areas monopolise water and thus arable land, extracting monopolistic rents from peasants, reducing them to virtual slavery, and favouring oppressive institutions. With control over water, yields are predictable (we know the Babylonians predicted crop yields), monitoring is not needed, and punishment can be promised based upon low crop yields. The elite protected their privileges through oppressive regimes, and exerted considerable influence over the bureaucracy. They educated their children so that they could reach high ranks in the bureaucracy, leading to its domination by a landed elite. This is true today in places like Pakistan, was true in Europe in the Middle Ages, and was also true in ancient Mesopotamia.

And as the “icing on the cake”, the Babylonian Creation Myth has as its high point a battle between Marduk, the principal god of Babylon, and Tiamat, a primordial goddess of the ocean and personification of sea salt. At least one interpretation has her as a creator goddess who joins with Abzû (the god of fresh water), in a marriage between salt and fresh water, to create the cosmos and produce younger gods. The story goes that Marduk defeats Tiamat, and in doing so creates the Earth and the skies (including the planets, stars, the Moon, the Sun, and the weather). He also puts the other gods to work in the fields, but when he destroys Tiamat’s husband, Kingu, he can then use his blood to create humankind to do the work of the gods.

Technologies of irrigation

Firstly we must note that water is not just about irrigation. You have drinking water, you have marshes and wetlands, and rivers are also bulk transport routes. On top of that water provides food resources (fish), economic resources (reeds), as well as often being sacred (and not forgetting 'waste removal').

As is usual when looking at a 'complex' topic such as irrigation, we tend to forget the even simpler and more basic technologies, such as well digging. In Tell Seker al-Aheimar (in modern-day northeast Syria) archaeologists have found Neolithic pottery that might date from the middle of the Pre-Pottery Neolithic B period (c. 6800-6700 BC). The settlement appears to have comprised extensive multi-room mud-brick buildings with gypsum-plastered floors, indoor storage, processing spaces, fireplaces, and livestock enclosures. An unusually large (14 cm high) bi-chrome painted clay seated female figurine of high artistry has also been excavated. However, in our context what is important is that they also found a 4 m deep water well dating to about 7000 BC. This might be the oldest well dug to gain access to a clean water source. Experts have noted ritual traces associated with the well.

Early settlements were situated near springs, rivers, etc., and whilst people did not know about pathogens transmitted in contaminated water, they certainly knew that pure water was a prerequisite for successful urbanisation and state formation. So we know that people built water wells at least 7,000-8,000 years ago, that by ca. 6000 BC spring water was being used to feed fields using small stone channels, and that by ca. 4000 BC dikes and terra-cotta drainage channels were being used to protect crops from flooding.

Cuneiform tablets provide a lot of administrative information, and mathematical texts looked at questions such as how much land could be irrigated to a particular depth from a cistern of specific dimensions. Then there were the volumes of earth that had to be removed in making water courses, and what that earth represented when piled up. Oddly there is not much on water management, nor on irrigation accounts or calendars. Often cuneiform texts don't distinguish between natural rivers and artificial canals, and there is little information on the size and layout of the irrigation network. However, there are some texts that hint at the extensive nature of irrigation networks. Tablets list the variety of classes of canals, with their different names. Starting with a major river or canal (naru) flowing down a gentle slope, you would create a secondary canal (namkaru) and give it a smaller slope than the main naru. After some distance you would no longer dig the canal but would instead build it up over the ground using dikes, from which you could derive a new namkaru.
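The two calculations described above (the cistern problem and the secondary canal that ends up above ground) can be sketched in a few lines of Python. This is only an illustration in modern units, not a reconstruction of any actual tablet: the function names, the rectangular cistern shape, the example dimensions, and the assumption that the land surface follows the slope of the parent watercourse are all mine.

```python
def irrigable_area(length_m, width_m, depth_m, water_depth_m):
    """Area (m^2) that a full rectangular cistern can flood to water_depth_m.

    Assumes a rectangular cistern and ignores seepage and evaporation.
    """
    if water_depth_m <= 0:
        raise ValueError("water depth must be positive")
    volume_m3 = length_m * width_m * depth_m
    return volume_m3 / water_depth_m


def height_above_ground(distance_m, parent_slope, branch_slope):
    """Height (m) of a branch canal bed above the land after distance_m.

    Assumes the land falls at parent_slope and the branch canal at the
    smaller branch_slope, both starting from the same level.
    Slopes are drops per unit length, e.g. 0.0002 = 20 cm per km.
    """
    return (parent_slope - branch_slope) * distance_m


# Hypothetical cistern: 10 m x 10 m x 6 m, fields flooded to 0.25 m.
print(irrigable_area(10, 10, 6, 0.25))           # 2400.0 square metres

# Hypothetical slopes: land drops 20 cm per km, branch canal 10 cm per km.
print(height_above_ground(10_000, 0.0002, 0.0001))
```

With these illustrative slopes the canal bed would stand roughly a metre above the surrounding land after ten kilometres, which is why it would have to be carried between dikes rather than dug.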

Today satellite images can help identify features and point to where irrigation might have been useful. And you have information on what the crops might have been, and this can suggest if irrigation would have been necessary at that place.

Irrigation has usually been associated with Lower (South) Mesopotamia, where the land is flat, the rivers meander, and where the most common irrigation technology was the natural or manmade levee (dyke, embankment). In fact natural dykes are created by sand and silt deposits as the river meanders down to the sea. More importantly, this preferential deposition of sand created over time a situation where the rivers were actually riding 1 or 2 metres higher than the surrounding land. This made it easier to dig irrigation channels leading down the levee rather than follow the very shallow gradient of the plain. This 'ideal' situation was marred by the tendency of rivers to burst their banks and create new channels, leaving some settlements without water, but creating new opportunities for settlement.

The annual flood cycle in Mesopotamia was poorly synchronised with the needs of cultivators. In contrast to the Nile, whose monsoon-driven annual flood well matched the needs of irrigation, the annual flood of both the Tigris and the Euphrates peaks in April and May as a result of winter rainfall and spring snowmelt on the mountains of Anatolia, Iran and Iraq. This not only threatened the ripening grain crops, but also required that considerable efforts be made to protect the fields from flooding. So the idea that the Sumerians actually expended more effort on flood control than in the distribution of water for irrigation may be correct. In fact most Ur texts dealing with earth moving refer to the construction of embankments (ég) and the need to protect the crops ready for harvest from the river’s high waters, and to prevent the flooding of towns and fields.

The mismatch between the annual flood and the eventual development of large-scale irrigation is perplexing because it makes it difficult to understand how the early phases of irrigation developed. Some experts suggest that initial irrigation was a form of flood recession agriculture that took place in the lower flood basins following the retreat of the annual flood waters. Although simple irrigation could subsequently have developed out of small natural overflows and levee breaks, which provided the locus for more organised irrigation, these could only operate during the spring floods when the water level was at its peak, which was not the correct time for the irrigation of cereals. Moreover, by discharging excess water at weak points on the river bank, these crevasses could be enlarged by the river, thereby encouraging channel breaks and even channel shifts or avulsions. On the other hand, spring and early summer floods would have benefited the palm gardens, which require copious water, especially during the hot summer months, and which must have been a very important crop during the 3rd millennium BC. Because date gardens occupy the levee crests, this cycle of summer irrigation would have been in keeping with the natural ecology of the region. This spring and early summer flood would also have provided the appropriate soil water to initiate cereal crops’ growth in the autumn. As the need for cereals grew during the later stages of the Ubaid and Uruk periods, irrigation channels could have been extended down levee to more distant fields that would progressively withdraw more water during the lower phases of the flood cycle. Such an evolutionary model of progressive development away from a riverine belt of palm gardens would fit the model where palm garden oases formed the primary focus of cultivation together with lower storeys of plants within the shade. The levee-crest garden agriculture of southern Mesopotamia would have complemented the wetland resources and provided the formative stage of Sumerian agriculture.

So we have seen the first (and second) of Mesopotamian 'technological inventions', levees and canals (and later furrow irrigation). We know that large dykes were strengthened by layers of reed mats, and that some later canals were lined with burned brick and the joints sealed with bitumen.

And there was, of course, crop domestication followed by domesticated animals (and later animal traction, not forgetting the yoke, which may date back to ca. 4000 BC).

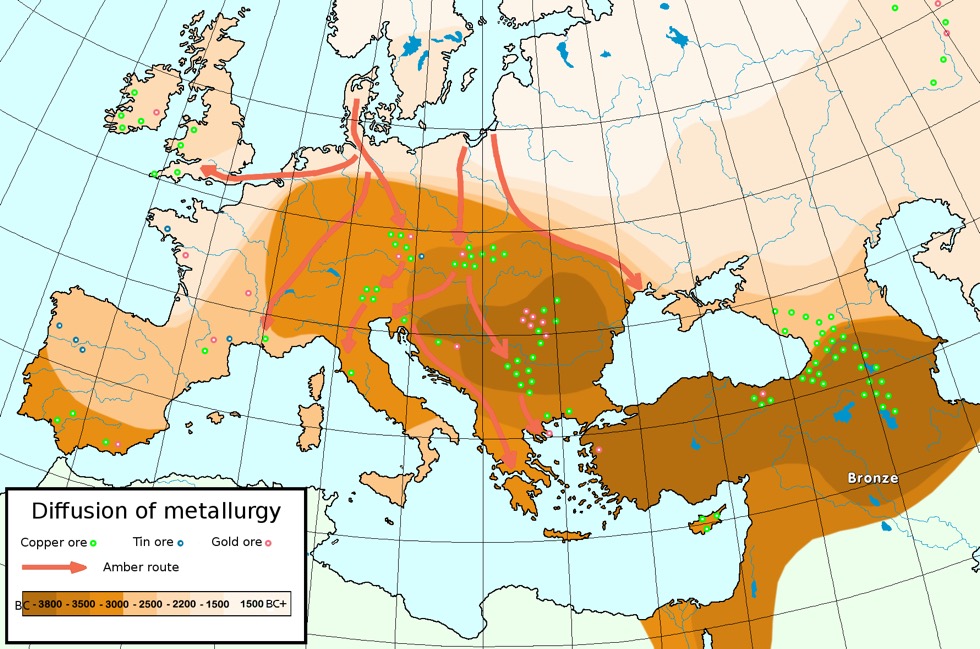

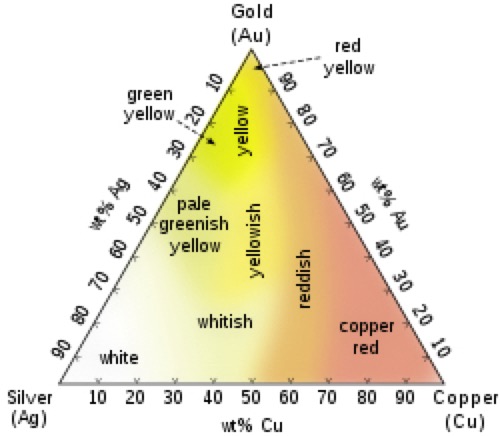

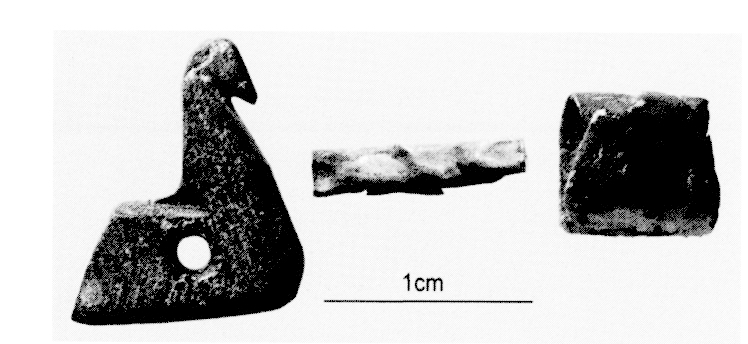

The move from stone sickles to low-cost clay sickles, and later to copper sickles is claimed by some experts to have been the most important Mesopotamian technical evolution since it had the greatest effect on agricultural productivity, and thus on health and well-being of millions of people. Stone vessels became a variety of pottery vessels for carrying and storing food and liquids. The hoe was an early development, then came the ard or scratch plow (see also hoe farming), and then the mattock. Later the seeder plow arrived, and then the threshing sledge.