Cambridge Analytica & Facebook

last update: 19 Nov. 2019

These are some recent links to articles that have not yet been integrated into this story:

The NSA confirms it: Russia hacked French election 'infrastructure', Wired, 9 May 2017

Conservative Twitter is freaking out over a reported bot purge, The Verge, 21 Feb. 2018

The Era of Fake Video Begins, The Atlantic, 8 April 2018

Mark Zuckerberg: “We do not sell data to advertisers”, TechCrunch, 10 April 2018

Transcript of Mark Zuckerberg’s Senate hearing, The Washington Post, 10 April 2018

Zuckerberg struggles to name a single Facebook competitor, The Verge, 10 April 2018

Five things the world finally realises about Facebook, Quartz, 11 April 2018

How To Avoid Being Tracked Online, Gizmodo, 11 April 2018

Mark Zuckerberg vows to fight election meddling in marathon Senate grilling, The Guardian, 11 April 2018

3 more claims that point to Vote Leave cheating, INFacts, 13 April 2018

Facebook to ask everyone to accept being tracked so they can keep using it, The Independent, 18 April 2018

Facebook to exclude billions from European privacy laws, BBC News, 19 April 2018

'Facebook in PR crisis mode', says academic at heart of row, BBC News, 24 April 2018

Twitter sold data to Cambridge Analytica - data sales account for 13% of revenue, Flipboard, 30 April 2018

Cambridge Analytica's Major players: Where are they now? Fast Company, 2 May 2018

Cambridge Analytica shutting down after Facebook data scandal, MacRumors, 3 May 2018

Facebook, Twitter, and Google would like to be the Gatekeepers of Democracy, without the responsibility, Gizmodo, 4 May 2018

Tech watchdogs call on Facebook and Google for transparency around censored content, TechCrunch, 7 May 2018

Congress releases all 3,000-plus Facebook ads bought by Russians, c|net, 10 May 2018

Facebook has suspended around 200 apps so far in data misuse investigation, The Verge, 14 May 2018

The DOJ and FBI are now reportedly investigating Cambridge Analytica, Gizmodo, 15 May 2018

Facebook details scale of abuse on its site, BBC News, 15 May 2018

It would appear that Facebook employs 15,000 human moderators, but that its algorithms struggle to spot some types of abuse. Apparently they detect 99.5% of terrorist propaganda, but only 38% of hate speech. Facebook also said it had in a 3 month period taken down 583 million fake accounts.

Cambridge Analytica whistleblower warns of 'new Cold War' online, Politico, 16 May 2018

This article from Politico could merit an extended development in that it would appear that Cambridge Analytica had close links with both Julian Assange, founder of Wikileaks, and that they used "Russian researchers, shared information with Russian companies and executives tied to the Russian intelligence".

Scandal-ridden Cambridge Analytica is gone but its staffers are hard at work again, Quartz, 16 June 2018

Data Propria (see also this Wired article from 29 May, 2018, on where staff from Cambridge Analytica have landed)

Facebook, Twitter are designed to act like 'behavioural cocaine', cnet, 4 July 2018

Facebook scandal: Who is selling your personal data? BBC, 11 July 2018

Article looks at role of data brokers such as Acxiom and Experian.

Four intriguing lines in Mueller indictment, BBC, 13 July 2018

This article mentions DCLeaks and Guccifer 2.0 as being fronts for Russian military intelligence, and famous "Russian if your listening…" comment of Trump.

Twelve Russians charged with US 2016 election hack, BBC, 13 July 2018

Donald Trump is the biggest spender of political ads on facebook, The Wrap, 18 July 2018

He has spent $274,000 on ads in a 2-3 month period, thats 9,500 ads in 2 months.

Inside Bannon's Plan to Hijack Europe for the Far-Right, Daily Beast, 20 July 2018

It would appear that Bannon wants to set-up a European foundation called The Movement to help right-wind populist parties.

Hackers 'targeting US mid-term elections', BBC, 20 July 2018

Three candidates have already been attacked using domains known as ''Fancy Bear'.

https://www.bbc.com/news/technology-64075067

Give the complexity of the topic treated in these pages what I’ve decided to do is to add here six videos. They can be viewed before reading the text as a way to pre-brief yourself, or you can wait to view them within the context of the text itself.

Start with the keynote The End of Privacy by Dr. Kosinski (March 2017), and then have a look at The Power of Big Data and Psychographics by Mr. Alexander Nix of Cambridge Analytica (Sept. 2016). Then we have Cambridge Analytica explains how the Trump campaign worked (May 2017). Now turn to The Guardian interview (March 2018) with Christopher Wylie, the Cambridge Analytica whistleblower, giving evidence to UK MP’s (March 2018). And finally spend a moment to listen to Steve Bannon on Cambridge Analytica (March 2018).

Be warned, this story is as much about ‘fake news’, disinformation, psychometrics, Facebook and its ‘social graph’, and privacy, as it is about Cambridge Analytica.

During March-April 2018 the Cambridge Analytica - Facebook story often occupied the front pages of the mainstream press. Perhaps because of the technical nature of the story, perhaps because of time and space constraints, many of the details were mashed together or just overlooked. Coverage in the specialist press was more complete, but again not always perfectly tied together. My interest has always been in the technical aspects, but this story is in many ways more about social engineering and modern but opaque business practices.

In any case I have tried here to capture the complete story, but one still can’t be sure that all the facts are out in the open (or even which are fact and which fiction). In addition I have tried to add some recent contextual elements, and even some elements that may or may not prove with time to be important. I am certain that my text will occasionally look longwinded and even overly detailed. I’m sorry for that, but I found it very difficult to “see the wood for the trees”, particularly when you don’t really know which trees are important anyway.

Here goes!

Setting the scene

Lets start at the beginning, Over the past 18-24 months numerous articles have appeared about people (read Russians) trying (and maybe succeeding) to influence or manipulate the US presidential elections and the ‘BREXIT’ referendum in the UK. But the reality is they have been active all over Europe.

Recently in the Italian national elections 2018

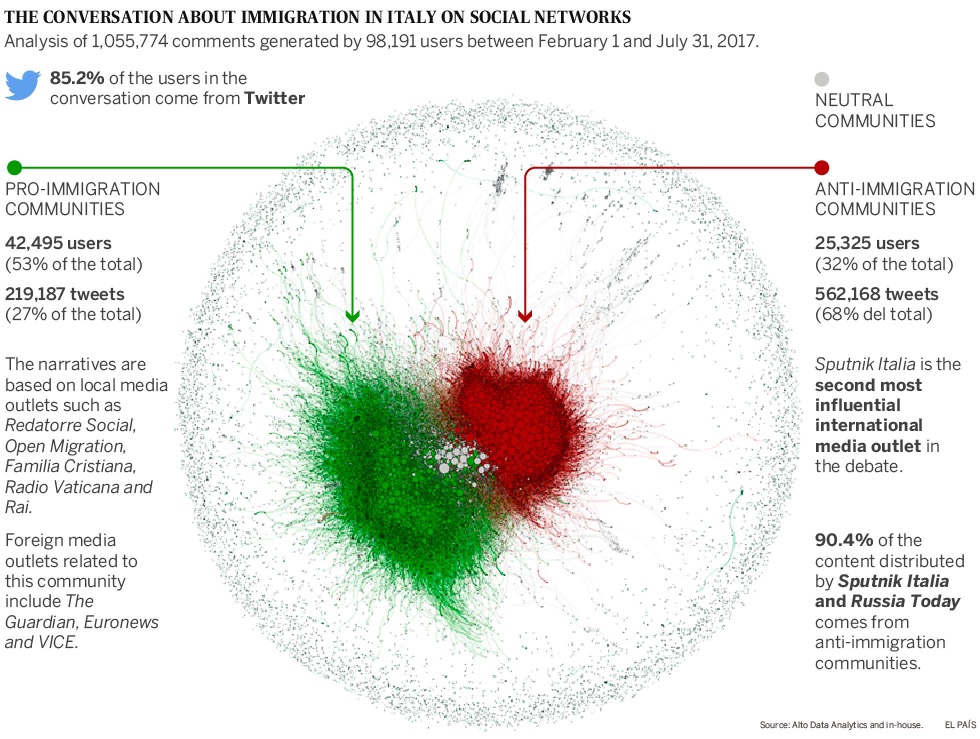

Recently the pro-European, centre-left El País ran an article about how Kremlin-backed media outlets promoted a xenophobic discourse during the 2018 Italian national elections.

This was presented in the specialist press as a perfect example of modern-days disinformation. Anti-immigration and anti-NGO activists shared stories published by RT and Sputnik, Russian government controlled news platforms (see this US Intelligence Report). The key message was that Italy had been invaded by refugees, and they were to blame for unemployment and inflation. And that European politicians were ultimately responsible.

You may think that this type of blatant disinformation will not work. However an analysis of more than 3,000 news sources over a 6-month period in 2017 showed that Sputnik was the second most influential foreign media source operating in Italy, after the liberal-inclined The Huffington Post. Sputnik was helped by a series of bots (more precisely bot farms) that continuously re-published anti-immigration comments.

The technique is simple (and clearly effective) and involves using questionable sources, biased experts and sensationalist headlines, all shared across 1,000’s of social network accounts. The aim, to make the content go viral, and thus to amplify the perception of the problem. Sputnik provided the content which was then shared by profiles who regularly disseminated anti-immigration messages. Immigration was linked to insecurity, crime and terrorism.

The El País article explained that Russia cultivated both ends of the political spectrum in Italy. The aim is to break the trade embargoes against Russia and “foster cooperation in areas of security, defence of traditional values and economic cooperation”, which is another way to say weaken existing EU, NATO and transatlantic alliances. These ideas have also been expressed by right-wing political parties in France, Hungary and Austria, and by the far-left in Greece.

We must remember that disinformation, as used by the Russians in Italy, reinforces misperceptions and misunderstandings, provokes and exploits existing problems, and generally engenders social disagreement and disaccord. Italy had, many would say still has "38% youth unemployment, a stagnating economy, a record debt load, endemic corruption, rising political apathy and dissatisfaction with traditional political parties, and a problem of immigration". Disinformation did not create these problems, it exploited them, it spun and twisted them, and it tried to foster an unhealthy and distorted debate on how to address them. I might add that the above quote came from an article in the very reputable sounding Strategic Culture Foundation ("focussing on hidden aspects of international politics and unconventional thinking"), which WikiSpooks defines as a Moscow-based anti-Zionist think tank and web publishing organisation.

At the end of the day who can you believe? Which media sources can be trusted? Malcolm X once said that the (mainstream) media controlled the minds of the masses but today the media can't decide whether they are in the business of reporting news or manufacturing propaganda, and frankly most people don't understand the difference. I can't remember who first said it but today both the mainstream media and social networks are in the business of "liquidification of meaning", and there are fewer and fewer solid "islands of truth" left.

Before that in the Catalonian independence referendum 2017

It is claimed that the same technique was used in the recent illegal referendum in Catalonia. Pro-independence messages and fake news were propagated by Russian news sources, which were then amplified via 10,000’s of profiles on social networks (including 1,000’s of profiles with Venezuelan accounts). There is evidence that this type of social conversation can sway public opinion. This disinformation appeared in the days preceding the referendum, and the Spanish State, the traditional political parties, and the usual mass-market media outlets did not manage to create an effective reply. In the days prior to the referendum nearly 80% of messages on social media defended the independence of Catalonia (it was estimated that more than 80% of those massages were from false profiles or bots). The message was clear, the Spanish police acted violently against peaceful Catalan citizens, and the Spanish State was a repressive Franco-like regime. It has been estimated that the combined messages of the two Russian State media outlets (and including the Russian linked Spanish language El Espía Digital) were 10 times more influential that both the centre-right El Mundo and Catalonia’s leading newspaper La Vanguardia. Yet Putin is quoted as saying that Catalan independence is “an internal matter for Spain”. The key is again is to sow dissent and discord by playing both sides, possibly with the longer term objective to see a strong Spanish far-right party emerge along the lines of VOX. There was also an undercurrent in the separatist media that the results of the Catalan referendum would legitimised the annexation/self-determination of the Crimean peninsula. And to top it all some of the pro-independence online activity came out of the US. David Knight on the 'fake news' pro-Trump InfoWars claimed that 700 mayors had been arrested in Spain and that the Spanish government was preparing to invade Catalonia by sea (he also criticised Trump for not supporting Catalan self-determination). The conservative pro-Trump Drudge Report also promoted Catalan independence to their 19 million subscribers. There was even a report that Russian hackers helped keep open census websites for the Catalan regional government.

Again disinformation did not create the strong ethnopolitical polarisation between Catalonia and Spain, it just tried to reinforce it and exploit it.

And before that in the US Presidential campaign 2016

Without dwelling too much on the recent US Presidential campaign Russian interference used the same basic approach. Sources were found, or created, that reinforced a particular claim or position. Those sources were given headline space, and re-published, and then often picked up by news services around the world. A stream of interactions on Facebook and Twitter convinced people about the truth, or at least newsworthiness of the sources. No one checked to see if the Pope had really said that US Catholics should vote to make America strong and free again. No one checked to see that 70% or 80% of the traffic on Facebook and Twitter came out of bot farms. Yet during the elections 60% of Americans claimed to use Facebook to help keep themselves informed. At that time identifying ‘newsworthy’ items on Facebooks was largely driven by algorithms that rewarded reader engagement above all else, and were thus almost designed to help make certain sensationalist news items go viral.

Through 2017 Facebook now employs more than 7,500 people to monitor video and news streams that are reported as inappropriate by their users (YouTube has 10,000 people working on this). However the ‘content moderators’ rightly prioritise pornography, terrorism, violence, and abnormal/Illegal behaviour, rather than ‘fake news’. But even then there are abundant examples of inappropriate content remaining on social networks for months before finally being taken down. YouTube has said it is using machine learning algorithms to help its content moderators to remove around 300,000 videos per year just in the category ‘violent extremism’. But most experts think that this is just ‘the tip of the iceberg'.

This story is about Cambridge Analytica and Facebook, but what exactly is Facebook?

If you read Wikipedia it tells us that Facebook is a social networking service. Zuckerberg tells us “people come to Facebook, Instagram, Whatsapp, and Messenger about 100 billion times a day to share a piece of content or a message with a specific set of people”. Some experts tell us that Facebook could easily rank as the largest online publisher in the world, and it’s advertising-driven for-profit perspective is no different from any of its mass media predecessors. Others might say that with its “f” everywhere it is a vast branded utility, serving ‘useful content’ rather than litres or kWatts. Yet others think of Facebook as a kind of closed World Wide Web designed to make as much money as possible. A few people have even suggested that it is similar to the cigarette industry, in that it is both addictive and bad for you. A more sensible idea, given that Facebook serves one in four of every ad displayed on the Web, is to consider it an advertising company. Facebook's advertising revenue was over $9 billion for Q2 2017, and they capture 19% of the $70 billion spent annually on mobile advertising worldwide.

Facebook has more than 1.3 billion daily active users, and more importantly over 1 billion mobile daily active users (85% of them outside the US and Canada). This is important because mobile advertising revenue represents 88% of their total advertising revenue. Mobile users access Facebook on average 8 time per day, and oddly enough brands post something new or updates an average of 8 times per day. The ‘like’ and ’share’ buttons are viewed across more than 10 million websites daily. People spend more than 20 minutes a day on Facebook, upload well in excess of 350 million photographs daily (adding to the 250 billion already uploaded), and post in excess of 500,000 comments every minute. Daily well over 5 billion items are shared, and over 8 billion videos are viewed, however the average viewing time per video is only 3 seconds. You often hear that Facebook is for old people, but 83% of users worldwide are under 45 years old, and men 18-24 make up the biggest sector of users (18%). Watching videos on mobile devices appears to have the highest engagement rate, but 85% of users watch with the sound off!

The average Facebook user has 155 friends, but would trust only four of them in a crisis. On the other hand 57% of users say social media influences their shopping habits. The average click-through rate for Facebook ads across all sectors is 0.9%, and oddly enough the highest click-through is in the legal sector and the lowest in employment and job training. Pay-per-click, the amount paid to Facebook by advertisers, is highest for financial services and insurance ($3.77) and lowest for clothing ($0.45), the average is $1.72.

There is an old adage that if you are not paying for it, you are the product. This is nowhere more true than with Facebook. US and Canadian users are the most valuable to Facebook, each generating on average over $26 revenue in Q4 2017, just from advertising. For the US and Canada it was $85 annually per regular user for 2017, but for Europe it was only about $27 annually.

At the end of 2017 Facebook was using 12 server centres, 9 in the US and 3 in Europe (2 more are planned for the US). They are the 3rd busiest site on the Internet (after Google and YouTube). I understand that Facebook started by leasing ‘wholesale’ data centre space, but they now own at least 6 server farms. At the end of 2015 Facebook reported that it owned about $3.6 billion in network equipment, and spent about $2.5 billion on data centres, servers, network infrastructure and offices. In 2013 it was estimated that each Facebook data centre was home to 20,000 to 26,000 servers, but I have seen figures quoted for new data centres with 30,000 to 50,000 servers. Facebook does not publish the total number of servers they run, but estimates have been made based on the surface areas (5,000 servers per 10,000 sq.ft) and on the power and water consumption of the server farms, and the figure could be anything between 300,000 to 400,000. The storage capacity of Facebook data centres has been estimated at 250 petabytes.

For the more technical minded reader Facebook created BigPipe to dynamically serve pages faster, Haystack to efficiently store billions of photos, Unicorn for searching the social graph, TAO for storing graph information, Peregrine for querying, and MysteryMachine to help with end-to-end performance analysis. And if you are a real techno-fanatic have a look at Measuring and improving network performance (2014) which will give you and insight into the challenges in providing the Facebook app to everyone, everywhere, all the time.

Facebook and ‘fake news'

Facebook has been criticised for failing to do enough to prevent it being misused for ‘fake news’, Russian election interference and hate speech. Today each time someone from Facebook talks about ‘fake news’ they mention artificial intelligence. Some types of image recognition are already being used, e.g. using face recognition to suggest a friends name in a picture. Already in 2014 they reported a more than 97% accuracy. The move today is to so-called ‘deep learning’ where the systems are taught to recognise similarities and differences in areas such a speech recognition, image recognition, natural language processing, and user profiling. Check out Facebook's page and videos here. This is not only about identifying people in photographs or videos, but about also understanding what they are doing, and identifying surrounding objects. They are also looking at language understanding, the use of slang, and the use of words with multiple meanings. The up-front claim is that this will help identify offensive content, filter out click-bait, and identify propaganda, etc. But at the same time it will help better match advertisers to users and identify trending topics, what Facebook calls “improving the user experience”.

Behind their sales pitch on artificial intelligence, Facebook are actually re-developing their hardware and software infrastructure to support machine learning. Pushing a sizeable portion of their data and workload through machine learning pipelines implies both a GPU and CPU infrastructure for processing training data, and the need for an abundant CPU capacity for real-time inference. In practice machine learning is used for ranking posts for news feeds, spam detection, face detection and recognition, speech recognition and text translation, and photo and real-time video classification. And not forgetting the need to determine (predict) which ad to display to a given user based upon user traits, user context, previous interactions, and advertisement attributes.

Facebook, an example of surveillance capitalism

We should never forget that Facebook solicits social behaviours, monitors those behaviours, maps social interactions (socio-mapping), and resells what they learn to advertisers (and others). This is the definition of surveillance capitalism. Authors on this topic have a nice way of introducing the topic with “Governments monitor a small number of people by court order. Google monitors everyone else”. In this form of capitalism, human nature is the free raw material. The ’tools of production’ are machine learning, artificial intelligence, and algorithms for big data. The ‘manufacturing process’ is the conversion of user behaviour into prediction products, which are then sold into a kind of market that trades exclusively in future behaviour. Better predictions are the product, they lower the risks for sellers and buyers, and thus increase the volume of sales. Once big profits were in products and services, then big profits came from speculation, now big profits come from surveillance, the market for future behaviour. We should not forget that behaviour is not limited to human behaviour - bodies, things, processes, places, … all have a behaviour that can be packaged and sold. But what of the rights of the individual to identity, privacy, etc.? The surveillance economy does not erode those rights, it redistributes them. Capital, assets, and rights are redistributed, and some people will have more rights, other less, creating a new dimension of social inequality. Do we have a system of consent for this new type of capitalism? Is there a democratic oversight of surveillance capitalism, in the form of laws and regulations?

Farfetched, maybe, but some experts see ‘automotive telematics’ as the next Google and another perfect example of surveillance capitalism. Automotive data can be used for dynamic real-time behaviour modification, triggering punishment (real-time rate hikes, financial penalties and fines, curfews, engine lock-downs) or rewards (rate discounts, coupons, points to redeem for future benefits). Insurance companies will be able to monetise customer driving data in the same way Google sells search activity. What Google, Twitter, Facebook, etc. do (and what everyone else wants to do) is sell access to the real-time flow of peoples daily lives, and better still modify peoples behaviour, for profit. If you think surveillance capitalism farfetched, have a look at Social Media Surveillance: Who is Doing It?, and if you still don’t believe it check out the “logical conclusion of the Internet of Things”.

Facebook's ‘social graph'

Facebook’s core tool for surveillance capitalism is its social graph. Here is a technical video presentation on the social graph, and here is a more business oriented presentation on the same topic (both from 2013). The social graph is often presented as one of the 3 pillars of Facebook, along with News Feed and the Timeline. In simple terms a social graph is composed of people, their friendships, subscriptions, likes, posts, and all the other types of logical connections. However for Facebook this means real world graphs at the scale of hundreds of billions of edges (an ‘edge’ is just a link or line between two ‘nodes’ in a graph). Below we can see just the ‘edges’ or lines drawn (relationships) between one particular user and their top 100 Facebook ‘friends’.

So the social graph actually represents a persons online identity, and of course Facebook maintains the worlds largest social graph. Naturally Facebook sees your social graph as a commercial asset, and they do not allow users to ‘export’ it to an alternative social network. In the past there were apps that mapped a persons social graph, but many have now been dis-activated. Below we have one persons actual Facebook social graph captures in 2011.

As a Facebook user you can't login and see your social graph. Facebook’s interface is carefully designed to renders invisible the processes by which the company profits from users’ interactions. Like firms such as Google, Facebook invites its users to exchange their personal information for the value they gain from using the service. Its interface is designed to maximise social interaction in a way that also maximises profits for the firm. In the past era of industrial capitalism this kind of exchange would have been managed by contract. In the era of surveillance capitalism, companies like Facebook manage the exchange of social information primarily by designing a semiotic environment, the interface. These environments carefully and deliberately shape the behavioural options for users. They have become what some experts call “choice architectures”, conglomerations of algorithms, texts and images designed not to tell a user what to do, but to subtly solicit a desired behaviour (and populate Facebook’s ‘social graph’).

In essence, Facebook sells advertisers on the idea that their ads will reach potential customers with a high likelihood of buying their products. To ascertain that likelihood, Facebook asks advertisers to define potential customer segmentation metrics. At the same time, Facebook surveils its users, abstracting patterns of interaction among them, and developing them into a rich ‘topological space’ or social graph, but one that pays no attention to other aspects of the life of the underlying users. Facebook maps and quantifies patterns of interaction and explores their association with particular behavioural outcomes. By recognising patterns and calculating relationships in real time, Facebook is able to infer the probability that a certain segment of their user population will be drawn to a particular community, action or product. It is essentially this inference that they sell to advertisers.

If you are attracted to the ideas behind a social graph, and/or to the intriguing graphics, check out visual complexity which is all about visualising complex networks.

Developers can get access to Facebook’s social graph through a kind of ‘back-door’ using Open Graph and the Graph API. What a developer does is to markup their website so that the Facebook Crawler can capture the title, description and preview image of the content. Then they need to register and configure the app, and submit it for review. Beyond that Facebook provides lots of guidance and a full software development kit.

How can you see your ‘social graph’?

This section is reserved from those who want to access, copy, view and even analyse a Facebook ‘social graph’. These are just starters to point the reader at a few tools that are out there. Frankly with all the problems that Facebook, Twitter, etc. are facing I’ve no idea if these tools will still work in the future. They worked when I tested them in April-May 2018.

We will start with Netvizz, here is a useful how to video. Firstly you need to open Facebook with Chrome or Firefox (not Safari). Secondly you need to find and load the app Netvizz (I found version 1.45). We will select the ‘page like network’. You will need to go to the page ids (Lookup-ID.com) and use the target Facebook profile URL. I picked 'Spain Is Different' with the URL ‘https://www.facebook.com/spain.likemag' to obtain the numerical ID ‘seed’ 558169620983049. You input the ‘seed’ and the depth (1 or 2) and ‘start’. This will download a zip file entitled pagenetwork_nnnn….zip which you unzip to obtain a .gdf file. Now you will need to visualise the ‘social graph’ with, for example, Gephi. Opening Gephi and opening the .gdf file will produce a rather simple ‘social graph’ for this particular example.

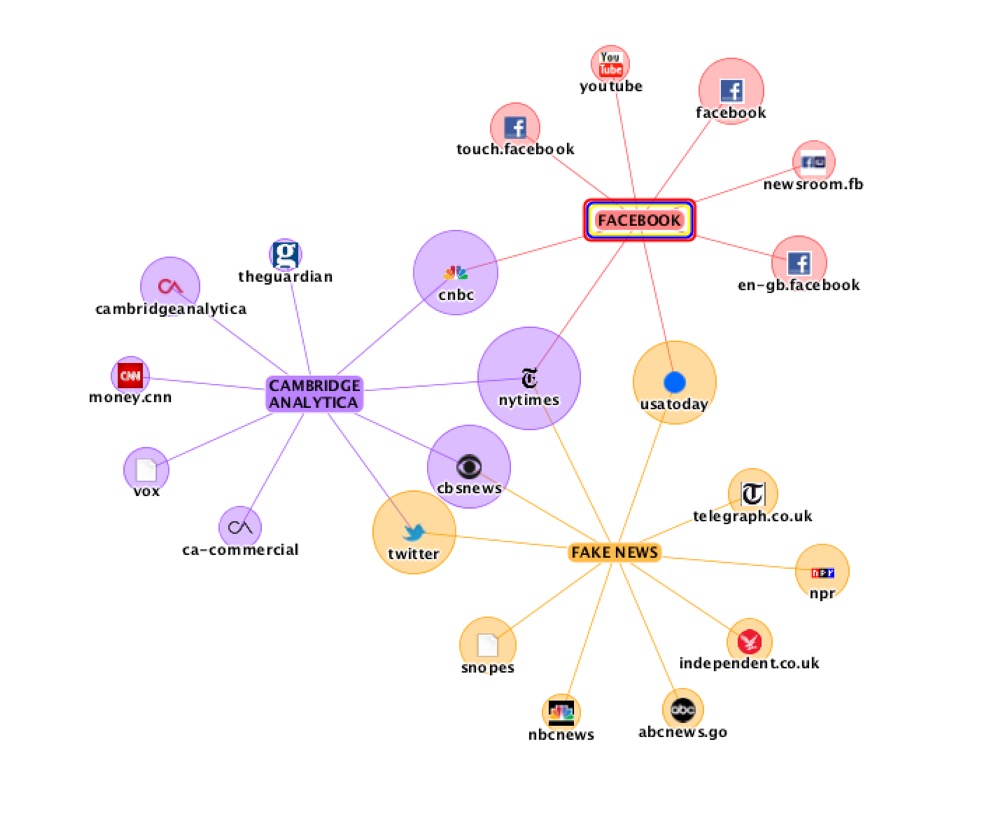

Next we have TouchGraph which is a visualisation and analytics tool, but one that can be integrated with consulting services. A simple trial produced the below visualisation of three terms, which in itself can lead to some interesting questions, e.g. why is it that in their recent articles only the New York Times still links 'fake news' with both Cambridge Analytica and Facebook?

TouchGraph's Facebook Browser (i.e. TouchGraph Photos) which in the past could visualise your friends and their shared photographs is no longer available.

The next one is NameGenWeb which now produced a blank page "due to changes to the Facebook API and the lack of funding to support continued development, we regret to announce that NameGenWeb will be offline for the foreseeable future".

Then we have Friend Wheel which already in September 2015 had to close down due to a change in the Facebook API caused by a revision of their privacy rules.

Facebook Friends Constellation was also a tool used to visualise relationships on Facebook, but it has completely disappeared.

Meurs Challenger is also a graph visualisation tool which still offers a 'friends visual map' for Facebook (I was not able to check it out because I refuse to have a 'flash player' on my computer).

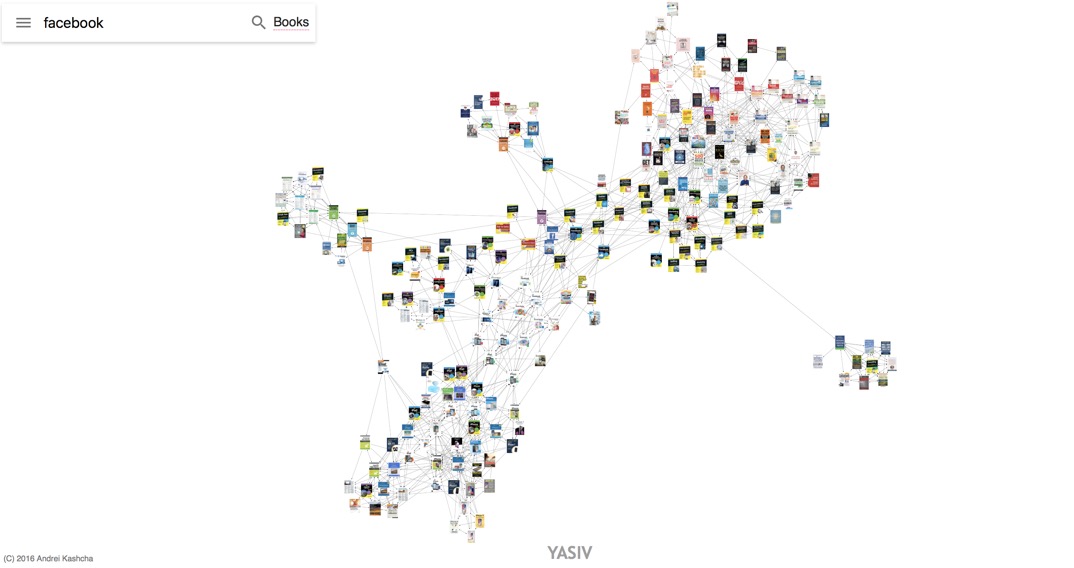

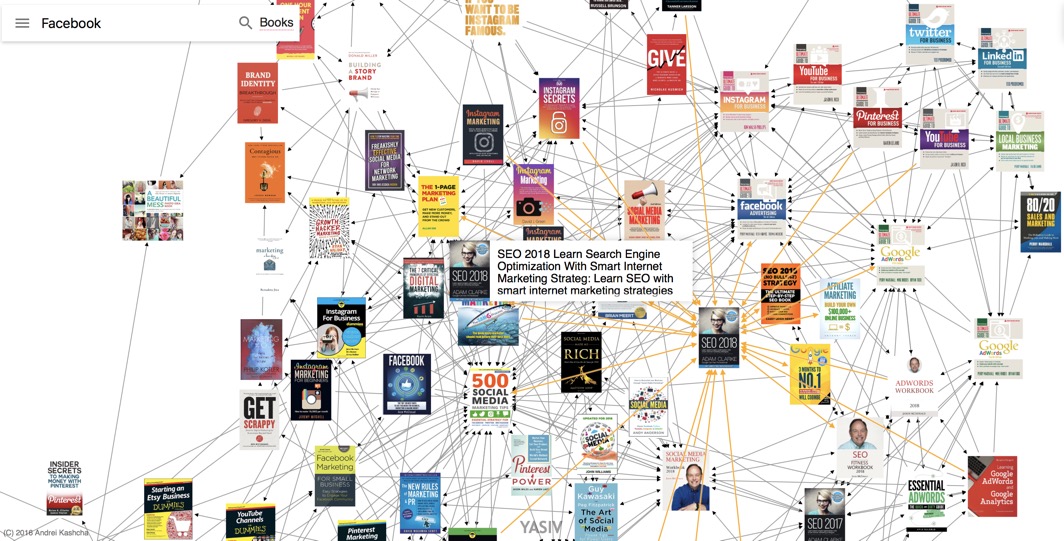

Interestingly www.yasiv.com once offered a '/facebook' option, but 4 years ago Facebook 'deprecated' the programming interface. On the other hand Andrei Kashcha still has his graphing software for Amazon books and YouTube videos. These are really useful, for Amazon the images below link together 224 books related to the term 'facebook'.

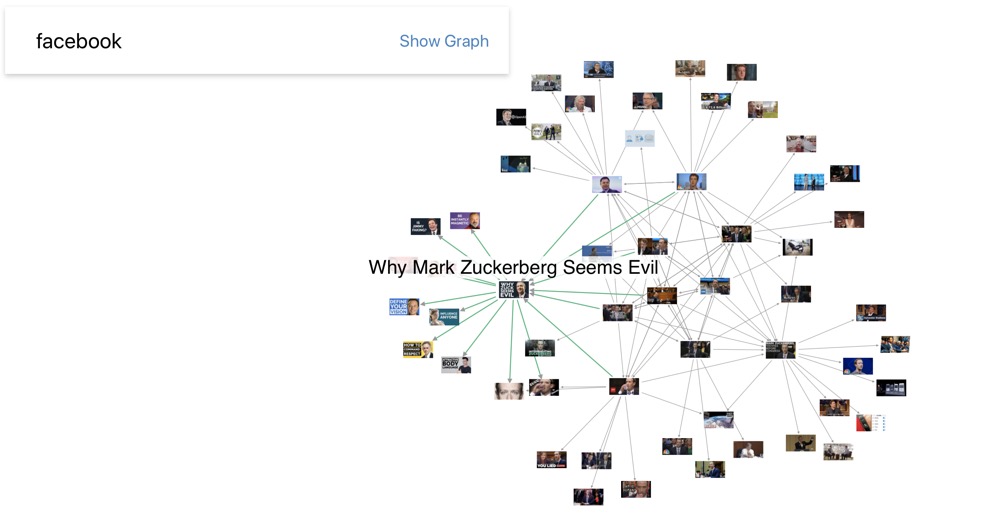

Below www.yasiv.com also has a YouTube application which provides a graph where each connection means that according to YouTube the video's were related.

Along with tools that create visualisations of ‘social graphs’ there are also ‘scrapers’, i.e. programs that can access and collect all sorts of data held in Twitter, Facebook, etc., and store the data in text or spreadsheet files for further analysis. Facepager is made for fetching public available data from Facebook, Twitter and other JSON-based APIs. All data is stored in a SQLite database and may be exported to a CSV file. This is a basic guide and this is its Facebook page. There are a couple of useful videos, here and here.

My impression is that Facebook will look to increasingly funnel (and authenticate) developers through their facebook for developers, which provides a vast range of tools and analytics. As a developer they can register and manage the login of their users, use Messenger to 'engage' with them, use webhooks to keep up to date on how they are making changes to their own Facebook settings, etc., and (naturally) accept in-app payments through Facebook's secure payment system. Developers can exploit the Facebook Instant Gaming platform, their API Center, the Instagram API, and the Facebook Marketing API. And when everything is working they can use Facebook Analytics to better service their users, measure ad performance, and build new 'audiences' for ad targeting.

It is certainly far more intuitive for someone looking for a product or service to use Google, Amazon or eBay. Facebook is an entirely different proposition. People don't go to Facebook to buy something or to research a particular service. It all starts with a supplier who already has a functional website up and running, offering and selling products and/or services, and already able to customise engaging content for different new audiences. What Facebook offers is a way to target (or re-target) those niche audiences and to funnel them through to specific parts of the website. Everything starts with the 'warm audience'. They already know the product or service, they are on customer lists, have already visited the website, and are Facebook fans. They are a kind of baseline experiment for promotions, providing data on what's performing best. With the knowledge of the existing audience segments (those who are the most engaged and who make the most purchases), Facebook will target lookalike audiences, and again the key is to collect data from these 'cold audiences'. It is vital that the existing website is able to customise an attractive offer for new niche audiences. If true, it can be marketed to Facebook Groups and Facebook Pages. It is pointless funnelling through interested users from Facebook if the website does not deliver.

Now Facebook comes into it own. Facebook can be instructed to show a particular landing-page ad to all those people who looked at a particular blog post, but who had not visited the website of the product or service. Remember the ad takes the people to a part of the website with a customised offer. Facebook can be instructed to run a different ad (say for a discount or free trial) for all those people who visited the landing-page but who did not 'convert'. Facebook can also be configured to show an ad with trust-building testimonials to all those people who refused the discount or special offer. Facebook can also be configure with a 'hard-sell' ad just for those people who took up the special offer but still did not 'convert'.

For those reader who are into marketing jargon, what the website of a product or service does is to focus on a brands existing 'touchpoints' that shape the customer's perception of the brand. The 'touchpoints' can be almost anything - product specification and range, pricing, packaging, branding, website, cross-sell, up-sell, customer service, billing, manuals and instructions, events, newsletters, surveys, loyalty programs, promotions, gifts, testimonies, customer blog, trade shows, technologies, environmental considerations, legal issues such as privacy, regulatory requirements, safety, on-line support, training, delivery, cost of ownership, etc. Again the importance is that Facebook is used to funnel new niche audiences into an existing (and hopefully efficient and successful) web-based marketing and sales machine. And more importantly still the whole Facebook funnel is automated.

Facebook and social science research

Much of the story about Cambridge Analytica and Facebook depends upon extracting data sets to support social science research. Over the years researchers focussed on Twitter more so than on Facebook. Fundamentally Twitter is just easier to understand and use. Tweets are a simple primary unit of Twitter, whereas Facebook has no single message primary unit. Because Facebook is designed from the bottom up to scale it is both conceptually and technically complicated and highly granular. And in the past Facebook was well known for it poorly documented privacy setting, making it difficult for researchers to known what they could and could not access and use. Even the Facebook Graph API was poorly documented, and known to be unreliable for large or complex requests. Those researchers who had accessed Twitter in the past found Facebook complicated and difficult to navigate (have a look here for a paper from 2013 telling us that Twitter was the Drosophila of social media research). For example, in the Facebook social graph nodes can be users or pages, and can have different fields and edges. What should a researcher collect, and what other connected nodes should be collected as well? Collect everything, you say. But be careful. As an example, retrieving a page on a famous person with their many associated photographs, videos, posts, comments, etc. actually took nearly 50 hours (2018 time), when including their friends profiles as well. The problem is that the request process is complex. An optimised request for the same information later took ‘only’ 87 minutes. It is true that a request to download and backup a user profile usually only takes a few minutes, but that does not include downloading the profiles of all their friends or the history of their extended comments and discussions, etc. Even in the past quite a lot of data would normally be private, although a lot of other information is public (e.g. comments) and can also contain sensitive information. In the case of Cambridge Analytica and Facebook it would appear that Cambridge Analytica were allowed access to a lot of private data, and that Facebook either ‘turned a blind eye’ or that their privacy rules were not enforced or were easy to circumvent. Give the lack of clarity of Facebook’s rules at the time it would appear that they allowed third-party apps to collect data about Facebook user’s friends, but this has changed now. It looks like they simply did not enforce their rules, and that meant that Cambridge Analytica just went ahead and used the data they had scraped from Facebook for non-academic purposes. Naturally all parties have expressed differing and contradictory opinions on what happened.

This will be short, but I would like to log a plea for the use and collection of social science data. Archives are a necessary social institution, some are national, others of film, some institutional, and some collect social science data (e.g. the UK Data Archives). Archives contain primary source documents that are presumed to be of enduring cultural, historical, or evidentiary value. Web archiving is essentially the same in that portions of the World Wide Web are preserved for researchers, historians, and the public.

The social sciences must collect a massive amount of data from social surveys through to health statistics and market research data. A lot of data is collected using public funds (e.g. environmental research, space science,…), and data archival is mandatory. Other types of digital data on such things as drug development or construction projects must also be archived for regulatory and insurance purposes. Would anyone be able to understand the details of the Trump presidency without an archive of his Twitter account? But this is not just about ‘data stewardship’, curation and ‘digital preservation’ of the past, since the analysis of this data also helps in the formulation of future public policies. For example, Twitter data has been used to predict riots, and Facebook has been use to understand eating disorders in adolescent girls, and how it enhances and undermines psychosocial constructs related to well-being.

I just hope that the fallout from the Cambridge Analytica and Facebook affair does not adversely affect the archival and use of data necessary to the social sciences.

Facebook news services (fake or otherwise)

Facebook Watch was launched in 2017 as a video-on-demand service. In part they wanted to compete with the video sharing platforms YouTube and Snap, in part they were looking to own and control original content, and in part they wanted to manage better the quality of news content being shared on their platform. No matter how you look at it, initially this was all about monetisation with Facebook sharing advertising revenues. In fact Facebook continued to say that people come to them to ‘see’ friends and family, not news. But the reality is that family and friends increasingly share news items. In 2017 Facebook admitted that they did not think that the way they focussed on dealing with the problems of ‘fake news’ and news integrity was time well spent. They were looking for new ways to confront ‘head on’ fake news and content that “violated community standards”. Now in 2018 their approach has changed again, and after again stressing that they are not a media company, their algorithms will now prioritise personal moments shared between friends and family, and focus less on news as a service. Facebook claimed in 2016 that they could squat fake news but where is the proof? In any case the changes they have made to Watch might be just a way to turn their back on the fake news problem, but it is also a more frontal attack on the YouTube market. The new Facebook Watch revenue model is now similar to that of YouTube, where content creators can upload their content free, and earn a cut in the revenue from ads placed on that content. Here is an interesting timeline of the history of Facebook’s Newsfeed algorithm.

Facebook has also recently upgraded its ‘info’ icon button, which provides more information about an article and the publisher, and users will be able to see which of their ‘friends’ shared fake news so they can ‘unfollow’ them. Most experts think that this is not going to be enough. The reality is that social networks live off advertising. They create ‘like-minded’ groups, aggregate user attention, and then sell that on to advertisers. Those who create fake news and want to propagate disinformation want to manipulate the behaviour of like-minded groups. So their objectives are subtly and unwittingly aligned.

Disinformation is not what you think

It would be a mistake to think that disinformation campaigns are limited to shovelling fake content onto Facebook, Google or Twitter. Digital advertising and marketing exploits precision online advertising, social media bots, viral memes, search engine optimisation, and intelligent message generation for different micro-targets. Modern day advertisers use these techniques, and disinformation operators are replicating all the same techniques. The interests of social media networks and advertisers are aligned, but the problem is that disinformation operators are typically indistinguishable from any other advertiser. Social networks capture every click, purchase, post and geo-location. They aggregate the data, connect it to individual email addresses or phone numbers, build consumer profiles, and define target audiences. Precision advertising is a kind of in-vivo experiment. Target audiences are not static, and the modern advertiser is looking to drive sentiment change and persuade users to alter their perception of a given product or service. Systems allow a kind of automated experimentation with thousands of message variations paired with profiled audience segments (and across different media channels). Advertisers also look to optimise search engine results and employ people who spend their time reverse engineering Google’s page rank algorithm. All the tracking data, segmentation, targeting and testing, and measures of relative success and failure are grit for machine learning algorithms. Disinformation operators want the same thing, precise audience targeting for their message. The way precision advertising can drive popular messages into becoming viral phenomenon is also exactly what a disinformation operator wants as well. They also want to see their messages on the top of Google’s search pages. But there is a key difference. Google can usually detect something is not right, and within hours they can correct for any distortions. But if it happens just before an election and the message goes viral, a few hours could be too late.

This webpage is all about Cambridge Analytica and Facebook, and how user data from Facebook was used to profile and target specific ‘like-minded’ groups. But we must not forget that the other side of the coin is the content, fake or misleading, which is used to ill-inform or disinform. The key is to coordinate lots of information, made quickly, made together, and all selling the same message.

Alexa, and Amazon company, provides a set of analytic tools, including providing traffic estimates for specific websites. So why can one lowly ranked site come out on top of a Google search?

You start with a strong message, and be sure to put solid honest caveats in the footnotes, e.g. “no evidence yet…”, “yet to be proven”, “no evidence made available yet”. You often see “… they had not replied by the time of publication”, and “… did not respond to a request for comments”. True or not, this kind of caveat is often used in the mainstream media and even by good journalists, and gives a sense of investigative credibility to any text.

Some sources simply invent stupid stories, but even these stories have their role to play because they give credence to other stories that subtly masks or distorts the truth.

Texts are written in a way that are easy to read and above all compelling to share. To get people to share, authors flatter their readers and make them look well informed and smart, using ’new’, ‘recent’, etc. so that they want to get in first and share. Authors use popular language and re-use words and expressions used by the more mainstream media, even if they may define the terms differently. Again the key is to create an alternative narrative. Authors use keywords that are used by their target audience, i.e. they may love words like evidence, Trump, Russia, CIA, new, secret, proof, …, and authors will always write in a way that allows them to rapidly re-use the same texts and references in the future, thus creating consistency over time (backward consistency creates a sense of credibility). Synchronising content around those key words will help search engines pick up on them. The same basic message must be cut-and-pasted into multiple articles over dozens of websites, all at the same time. Authors will cross-reference their different websites together, using each as a reference for the other. And they try to make sure each of their websites is referenced abundantly by many other websites (that is why they have a lot of websites up and running on a whole variety of topics, so they can reference each other). Also authors will abundantly reference mainstream media, even if it is simply to say that they are just part of a corrupt government-business conspiracy. Authors must try to build conversations, and foster lots of comments, positive and negative (even if they have to write them themselves under pseudonyms). Now all they need to do is beam out their information to their micro-targeted demographics. That means publishing, and then updating and re-publishing, and re-writing, and tweaking, all because Google’s algorithms like newness. Even if the original news items are one week old, everything is written as if it is a breaking story.

And perhaps the most important things is that this type of disinformation is not targeting an opponent or adversary, it is designed to further attract, entrench or indoctrinate supporters (micro-targeted users, ‘like-minded’ communities, the tribe).

Check out this report by TIME and this by The Guardian, for a really good insight into the work of misinformation.

If you want to know what Facebook thinks of “actions to distort domestic of foreign political sentiment” have a look at their 2017 report Information Operations and Facebook.

Here we have an article on “Analysing the Digital Traces of Political Manipulation: The 2016 Russian Interference Twitter Campaign”, and the Harvard report on the 2016 US Presidential elections entitled “Partisanship, Propaganda, & Disinformation”.

Other authors look at the ‘larger picture’ with the 2016 report “Who Controls the Public Sphere in an Era of Algorithms?”, the 2017 White Paper “The Fake New Machine”, and the 2017 reports on “Computational Propaganda” and “Troops, Troops and Troublemakers: A Global Inventory of Organised Social Media Manipulation”.

Have a look at the recent and very extensive 2018 article “Media Manipulation 2.0: The Impact of Scale Media on News, Competition and Accuracy”, and the very recent (March 2018) opinion of the European Data Protection Supervisor on online manipulation and personal data.

If your are looking for a more technical perspective, try reading these articles, “The Ethics of Automated Behavioural Microtargeting, “Can We Trust Social Media Data? Social Network Manipulation by an IoT Botnet”, “The State of Fakery” and “On Cyber-Enabled Information/Influence Warfare and Manipulation”.

No matter how you look at it identifying ‘fake news’ is difficult

We must be honest with ourselves, identifying fake news is a challenge. The Gartner Group recently predicted that by 2022 the majority of people in advanced economies will see more false than true information. In fact there is something called The Fake News Challenge run by volunteers in the artificial intelligence community. The first challenge was simply to identify whether two or more articles were on the same topic, and if they were, whether they agreed, disagreed, or just discussed it. Talos Intelligence, a cybersecurity division of Cisco, won the 2017 challenge with an algorithm that got more than 80% correct. The top three teams used deep learning techniques to parse and translate the texts. Not quite ready for prime time, but still an encouraging result. The reality is that given the limitations in language understanding these techniques are likely to be used as tools to help people track fake news faster. Future challenges will look at more complex tasks such as images with overlay fake news texts. This technique has recently been introduced by sites that harvest ad dollars after new controls were introduced by Google and Facebook.

But will it be enough? You can try to identify, label and choke off fake news suppliers, but nothing is done about those who consume fake news. The same Gartner report predicted that growth in fake content will continue to outpace artificial intelligences ability to detect it.

Yet the ongoing premise is that humans will not be able to detect and disarm today’s weaponised disinformation, so we need to look to machines to save us. Individuals might not worry too much about the truth of some stories, we have a multitude of ways to filter out fake news and a multitude of ways to want to believe in what fake news is telling us. But content platforms and advertisers may have something to lose by hosting or being associated with fake news. They can try to cut off revenue streams for those who create fake news, but they need some automated tools (Trive might be one way forward). These tools can start to identify phoney stories, nudity, malware, and telltale inconsistencies between titles and texts. Sites can be blacklisted, image manipulation identified, and texts checked against databases of legitimate and fake stories. One problem is that machine learning techniques are also being use to create ever-more convincing fakes. The smartphone app called FaceApp can automatically modify someone’s face to add a smile, add or subtract years, or even swap gender. It can smooth out wrinkles, and even lighten skin tones. Lyrebird can be used to impersonate another person’s voice. In 2016 Face2Face demonstrated a face-swapping program. It could manipulate video footage so that a person’s facial expressions matched those of someone being tracked using a depth-sensing camera. Different but related to this, another research project showed how to create a photo-realistic video of Barack Obama speaking a different text (right down to convincing lip sync and jaw/muscle movement). It is early days, but these types of technologies go beyond just fake news issues and actually challenge the meaning of juridical evidence.

Some experts tell us not to overreact. One study showed that fake news during the 2016 Presidential elections was widely shared and heavily tilted in favour of Donald Trump. Whilst more than 60% of US adults got news from social media, only 14% of them viewed that media as their most important source of election news. At the end of the day the study concluded that the average US adult saw and remembered just over one fake news story in the months before the election, and that the impact on vote shares was probably less than 0.1%, i.e. smaller than Trump’s margin of victory in the pivotal states.

The Tide Pod Challenge

Eliminating fake news is a challenge. How do you stop fake news from spreading and mutating, from jumping from one platform to another, given that what makes an idea outrageous and offensive is what makes it go viral in the first place? As early as 1997 kids were playing the competitive milk chugging challenge, i.e. to drink one US gallon (3.8 litres) of whole milk in 60 minutes without vomiting. Then in 2001 there was the cinnamon challenge, i.e. eat a spoonful of ground cinnamon in under 60 seconds without drinking anything. And upload the video to YouTube. These challenges carry substantial health risks. Salt and ice is another challenge, as is the Banana Sprite challenge.

In 2015 The Onion, a US satirical newspaper, published “So Help Me God, I’m Going to Eat One of Those Multicoloured Detergent Pods” from the perspective of a strong-will small child. The reality is that children do eat brightly-coloured sanitisers, deodorants, and detergent pods. After that people who should know better started to eat the pods. In early 2018 the “Tide Pod Challenge” swept across the Internet. People posted YouTube videos of themselves eating Tide Pods and videos of those challenged to eat one (it is both disgusting and toxic).

In the spirit of fake news we must ask at what point should YouTube and Facebook start taking down Tide Pod content? Some videos warn people not to eat Tide Pods, others are awash with irony, yet others are jokes and present juicy Tide Pod pizza. Yet other videos actually show people eating the Tide Pods. How to provide moderation guidelines, or program automated filtering software? And we should never forget that taking down videos can actually draw more attention to them (Streisand effect).

You ‘solved’ the Tide Pod problem, and now comes Peppa Pig drinking bleach. Stuff like this is neither innocent, nor knee-jerk repulsive. It can easily attract some apparent interest, which can as easily get amplified. If the recommender systems ‘think’ people are interested, then other people will start automatically to remix new pieces of content. It is spam, but no longer is it designed to sell you something. Your attention is the new commodity. Your attention is sold back to the social media platform’s ad engine and someone else has to try and see how to make money on Peppa Pig going to the dentist.

You think that all this is just fantasies on the Internet? As an exercise have a look at Pizzagate, which connected several US restaurants with high-ranking officials of the Democratic Party, and an alleged child-sex ring. In June 2017 Edgar Welch was sentenced to 4 years for firing an assault rifle in D.C.’s Comet Ping Pong pizza restaurant after he had watched hours of Youtube videos about Pizzagate. He said he was convinced that the restaurant was harbouring child sex slaves. Fortunately no one was hurt.

As with any complex, dynamically changing and partially 'intransparent' technology some unanticipated consequences are inevitable, and some even may be desirable. The Internet, social networks, and even advertising are not in themselves perverse technologies, but ignorance and mistaken hypotheses can create situations which are unacceptable to society. Ignorance we can (must) address by increasing our understanding of both the technology and its inherent characteristics. Given that unanticipated consequences are inevitable in all activities, our objective must be to try to reduce both short-term and long-term uncertainty (but that will always have a cost). We may want to slowdown technological progress, or hold things up whilst we carry out further studies, analysis, and experiments. We may think that any long-term ‘solution’ will cost too much time and money, so we aim for short-term ‘quick fixes’. But those quick fixes could cost more in the long run. For example we know fake news devalues and delegitimises expertise, authoritative institutions, and the concept of objective data. The long-term consequence could be that society’s ability to engage in rational discourse based upon shared facts in undermined. We can look to take out some kind of ‘insurance’ by bring an industrial sector together to cover the catastrophic consequences of their collective actions. This could include self-regulation with ‘disputed’ and ‘rated false’ warning banners, possibly inserted by a new type of independent, trusted gatekeeper. We can look to control, regulate or guide an industrial sector. We can legislate against certain practices. This will have financial consequences and will also reduce their/our freedoms. Or should we look at fake news as a symptom of much deeper structural problems in our media environment? Perhaps we have to accept that popularity, engagement, and ‘likes' and ‘shares’ are now more important that expertise and accuracy.

US indictment of Russians 2018

In March 2018 we had the US announcing criminal charges against Russians who interfered in the 2016 elections, and Facebook telling us that the Russians abused their system. The indictment mentions 41 times the use of Twitter, Youtube and Facebook to “divide America by using our institutions, like free speech and social media”. At one level the idea is to cause confusion, distrust, and sow division, but equally it is stated in the indictment that the Russian Internet Research Agency (also known as the Trolls from Olgino, one of Russia's Web Brigade) employed a dozen full-time staff and spent $1 million a month to try to ensure that Clinton was not elected. A particular feature of this interference was their targeting of minority groups to encourage them to stay away from the polls, on the basis that neither Clinton nor Trump were worth it.

This article includes the video of the indictment, and naturally many people have pointed out that the US is no stranger to doing the same. Time has pointed out that the Russians are also targeting the US 2018 election cycle. Time has also noted that Russian intrusions in to the 2016 US elections was more extensive than originally reported.

The problem today is that the misuse of social networks may be banned as a matter of policy, but social networks are actually designed to share freely and openly peoples ideas, comments and opinions, and to do so rapidly and globally. Ads could be used to target people, for example asking them to follow a Facebook page on Jesus. Later they would use that group to spread the meme of Hillary Clinton with devil horns. Facebook itself estimated that 10 million people saw that paid ad, but that 150 million people saw the meme generated from fake accounts. During the 2016 elections the six most popular Facebook pages of the Russian Internet Research Agency received 340 million ‘shares’ and nearly 20 million comments, etc. The reality is that Facebook algorithms supported and aggressively marketed the micro-targeting used by the Russians because they are designed to focus on and support very active social and political discussion, divisive or otherwise.

According to a Wired article the Russians did not abuse Facebook, they simply used it in the way it was designed to be used.

Others have echoed this analysis. Social networks such as Facebook are designed to promote certain types of content. An idea that can motivate people to share it will always thrive and spread (it is the definition of viral content). Content creators optimise their content for sharing, and social networks optimise for advertising revenue. Social networks are easy to access and sharing content is almost instantaneous and on a global scale. On top of all that the social networks try to personalise content, meaning that whatever is good or bad gets into the hands of people who are most likely to find it appealing and worth sharing. So the underlying structure of social networks implies that they will forever go from one crisis to another. Removing one type of unacceptable content due to one crisis, will just be followed by the next content-crisis. Russian content trolls were not the first ‘baddies', and won’t be the last.

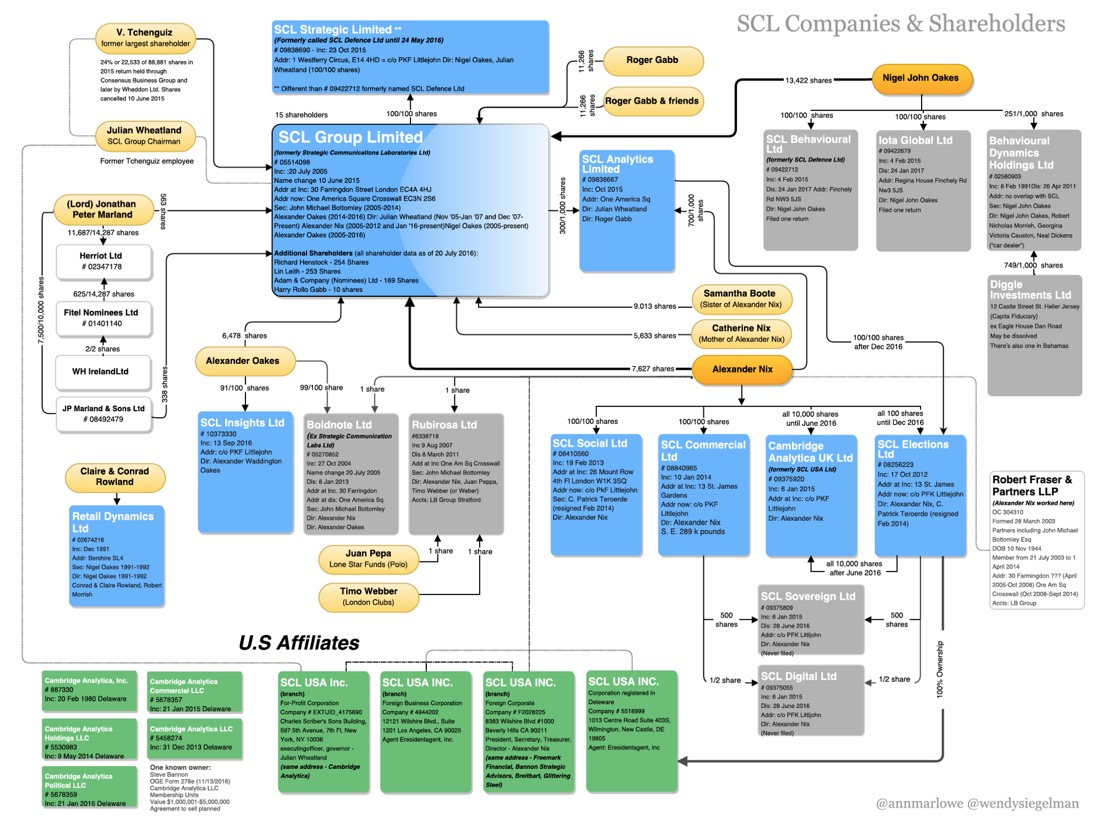

So who exactly is Cambridge Analytica?

According to their own website Cambridge Analytica “use data to change audience behaviour”, and they have two divisions: Commercial (data-driven marketing) and Political (data-drive campaigns). Their core skills are market research, data integration, audience segmentation, targeted advertising and evaluation. Wikipedia tells us that Cambridge Analytica belongs to the SCL Group which is partly owned by Robert Mercer. Mercer is a US-born ex-computer scientist, the co-founder and now ex-CEO of the hedge fund Renaissance Technologies, and part-owner of the US-based far-right Breitbart News. Wikipedia tells us that Mercer was (and probably still is) one of the most influential billionaires in US politics. He is known to have supported US Republican political campaigns, the Brexit campaign in the UK, and the recent Trump campaign for the US presidency.

What did Cambridge Analytica do wrong?

In simple terms they are accused of collecting and exploiting Facebook user data from about 50 million people without getting their permission. According to this article in 2014 Cambridge Analytica promised Robert Mercer and Stephen K.Bannon (responsible for making Breitbart News a platform for the alt-right) to “identify the personalities of American voters and influence their behaviour”. To do this they harvested personal data from Facebook profiles for more than 50 million Americans without their permission. This ‘data leak’ allowed Cambridge Analytica to exploit the private social media activity of a huge group of the American electorate, developing techniques that underpinned their work on Trump’s US presidential campaign in 2016.

When did it all start?

According to the mainstream press (the ‘simple' story) it is supposed to have all started in 2014 with the Amazon mechanical turk. This is a marketplace that brings clients and workers together for a range of data processing, analysis, and content moderation tasks (it takes its name from a late-18th C fake chess-playing Turk machine). The story goes that a task was posted by “Global Science Research” offering ‘turkers’ $1 or $2 to complete an online survey. But they were only interested in American ‘turkers’ and they had to download a Facebook app which would “download some information about you and your network … basic demographics and likes of categories, places, famous people, etc. from you and your friends”. This request was suspended in 2015 as a violation of Amazon’s terms of service. In fact some of the ‘turkers’ noticed that the app took advantage of Facebook’s ‘porousness’ by collecting everything that they wrote or posted with their friends. It is claimed in several reports that Facebook provided tools that allowed researchers to mine the profiles of millions of users. At least until 2014 Facebook allowed third-party access to friends’ data through a developer's application, a kind of back-door to the Facebook social graph. New privacy setting were introduced, but some reports clearly suggest that Facebook continued to encourage third-party data ‘scraping’. It is true that Facebook later asked Dr. Kogan and Cambridge Analytica to delete the data they had collected. The problem was that Facebook did not police how their data was collected and used by third-parties, and did not check to see if their rules were being respected.

Why did Global Science Research want to collect all this data?

Firstly, they were a UK-based company doing market research and public opinion polling. Incorporated in 2014, it was voluntarily dissolved in October 2017. The company founders were a US citizen called Dr. Aleksandr Spectre and another US citizen Joseph Andrew Chancellor. Chancellor was a post-doctoral researcher in the University of Cambridge, and is now a quantitative social psychologist on the User Experience Research team of Facebook.

In fact as far as I can tell two companies were created and later dissolved. One called Global Science Research registered in 29 Harley Street, London (an off-shore address for more than 2,000 companies), and the other Global Science (UK) registered at Magdalene College in the University of Cambridge.

Spectre was 'temporally' the name of Dr. Aleksandr Kogan. In 2015 he married a Singaporean named Crystal Ying Chia and together they changed their family name to Spectre (they divorced in 2017). There is ample evidence to show that they picked Spectre as a derivative of Spectrum, since they were married in the international year of light (so forget all those Jame Bond references). Another source noted that he has now changed his name back again to Kogan. However, at this moment in time Dr. Aleksandr Spectre is registered as a research associate in the Department of Psychology in the University of Cambridge. His research interests are in prosocial emotional processes, and in particular the biology of prosociality, close relationships and love, and positive emotions and well-being. He is also mentioned as being a member of the Behavioural and Clinical Neuroscience Institute, part of the Department of Psychology. He has a separate blog on Tumblr where he mentions his research interests in well-being and kindness. He also mentions being the founder/CEO of Philometrics, a survey company that claims to be able to automatically take a survey with 1,000 responses and forecast how another 100,000 people would answer the same survey.

Also at this moment in time Dr. Aleksandr Kogan has a separate page under Neuroscience in the University of Cambridge (also in the Department of Psychology). He also appears on a different page for Cambridge Big Data.

There are numerous sources telling us that Dr. Aleksandr Kogan worked for Cambridge Analytica. They say that he harvested personal data from millions of Facebook users and passed that information on (I presume to Cambridge Analytica). They state that much of the information was obtained without users consent. It is also said that Cambridge Analytica created psychological and political profiles of millions of American voters, so that they could be targeted as part of the Trump 2016 presidential campaign. Facebook has suspended SCL Group, Cambridge Analytica, and Dr. Kogan. There is a privacy class-action lawsuit open against Cambridge Analytica and Facebook, and Facebook (and Zuckerberg) is being sued by shareholders in separate class-action lawsuits claiming that executives and board directors failed to stop the data breach or tell users about it when it happened, thus violating their fiduciary duty.

However, the University of Cambridge has made a written statement about Dr. Aleksandr Kogan and his work in the Cambridge Prosociality and Well-Being Lab (in fact he is the lab’s director). It confirms that he owned Global Science Research and that one of his clients was SCL, the parent of Cambridge Analytica. It states that none of the data he collected for his academic research was used with commercial companies. The situation appears to have been that he developed a Facebook app for collecting data for academic research. But that the app was repurposed, rebranded and released with new terms and conditions by Global Science Research. It also mentions that Dr. Kogan also undertook private work for the St. Petersburg University in Russia.

The Mail Online from the 3 April 2018 questions this simplistic view. They noted that some of Dr. Kogan’s colleagues disapproved of his research, and that the situation was not as clear-cut as suggested in the above written statement. It would appear that Cambridge Analytica paid Global Science Research £570,000 to develop a personality survey called This Is Your Digital Life. They paid between £2.10 and £2.80 to each of 270,000 US voters to complete the survey via a Facebook app. This part of the story appears to overlap with the payment made to ’turkers’. Different reports suggest that the original idea was to create an app called ‘thisisyourdigitallife’ that offered Facebook users personality predictions, in exchange for accessing their personal data on the social network along with some limited information about their friends, including their ‘likes’. In any case the app did harvest information from Facebook profiles of those users friends’, meaning that data on more than 30 million people was collected (this figure was upgraded to 50 million in numerous articles, and most recently upgrade again to 87 million). The Mail Online noted that Dr. Kogan thought that he was acting perfectly appropriately and now was being used as a scapegoat by both Facebook and Cambridge Analytica. In addition he thinks that the data collected was not that useful, and that Cambridge Analytica was exaggerating the accuracy and trying to sell ‘magic’. It must be said that other psychologists in the University of Cambridge are of a different opinion. They claim that findings show that accurate personal attributes can be inferred from nothing more than Facebook ‘likes’.

Reports and articles differ in the details

Some news reports suggest that Dr. Kogan and Global Science Research were paid by Cambridge Analytica to collected the data on millions of people. Other reports suggest that the original app was modified and then used by Cambridge Analytica to collect that same data. Yet other reports say that the original app was created as a research tool, but then transferred to Global Science Research. There it was renamed and the terms and conditions changed before being used by Cambridge Analytica. Cambridge Analytica is on record as saying that it did not use Facebook data in providing services to the 2016 Trump presidential campaign. Global Science Research is on record as saying that the users of the Facebook app were fully informed about the broad scope of the rights granted for selling and licensing, and that Facebook itself raised no concerns about the changes made to the app. The whistleblower Christopher Wylie (more about him later) suggests that Facebook did most of the sharing and they were perfectly happy when academics downloaded enormous amounts of data.

An article in The Guardian states that Dr. Kogan and Facebook were sufficiently ‘close’ that Facebook provided him in 2013 with an anonymised, aggregated dataset of 57 billion Facebook friendships, i.e. all friendships created in 2011. The results on the "wealth and diversity of friendships” were published in 2015.

Deep Root Analytics

For a moment we are going to turn away from Cambridge Analytica and Facebook, but we are not finished.

Deep Root Analytics looks at media consumption and offers TV targeting technology, i.e. helps companies make better ad buying decisions. The company was founded in 2013 by Sara Taylor Fagen (former aide to George W. Bush), Alex Lundry (a data scientist and micro-targeter) and TargetPoint Consulting. TargetPoint Consulting was founded in 2003 by Alexander P. Gage, creator of political micro-targeting. TargetPoint were exclusive data suppliers to the Bush-Cheney ’04 campaign. Deep Root Analytics was to improve micro-targeting for web-enabled media. In June 2017 Deep Root’s political data for more than 198 million American citizens (ca. 60% of the US population) was found on an insecure Amazon cloud server. At the time they were working for the US Republican National Committee on a $983,000 contract. The data was a compilation and included information from a variety of sources, including from The Data Trust, the Republican party’s primary voter file provider. Data Trust received $6.7 million from the Republican Party in 2016, and its president is now Trump’s director of personnel. Other contributors have been identified as i360, The Kantar Group, and American Crossroads. The 1.1 terabytes of data included names, addresses, dates of birth, voter ID, browser histories, sentiment analysis and political inclination, etc., but not social security numbers. It also included peoples suspected religious affiliation and ethnicity, as well as any positions they might have on gun ownership, stem cell research, eco-friendliness, the right of abortion, and likely (predictive) positions on such things as taxes, Trump's “America First” stance, and the value of Big Pharma and the US oil and gas industry. It must be said that much of the data originally was used to understand better local TV viewership for political ad buyers. All modern-day political organisations collect bulk voter data to feed their voter models. TragetPoint, Causeway Solutions and The Data Trust worked for the Republican Party, but other companies such as NationBuilder and Blue Lab work for the Democrats. Some of the data such as voter rolls are publicly available, other data was created by proprietary software. In fact Obama’s 2012 campaign also collected information from Facebook profiles and matched the profiles to voter records. The key problem is that Deep Root Analytics failed to protect the data. It must be said that the data left on the server was mostly from 2008 and 2012, and even today the 2016 data is considered by experts as ’stale’. A class-action lawsuit has been filed against Deep Root Analytics.

Back to Cambridge Analytica and Facebook

Earlier on I wrote that it all started in 2014 with the Amazon mechanical turk. But I also noted that some of Dr. Kogan’s colleagues disapproved of his research. In fact this story goes back further than 2014.

In 2013 Michal Kosinski, also with the University of Cambridge, published a paper entitled “Private Traits and Attributes are Predictable from Digital Records of Human Behaviour”. The focus was on using Facebook ‘likes’ to automatically and accurately predict a range of highly sensitive personal attributes including: sexual orientation, ethnicity, religious and political views, personality traits, intelligence, happiness, use of addictive substances, parental separation, age, and gender. The information was collected through the voluntary participation of Facebook users by offering them the results of a personality quiz MyPersonality. The basic idea was that the app enabled users to fill out different psychometric questionnaires, including a handful of psychological questions such as “I panic easily” or “I contradict others". Based on the evaluation, users received a “personality profile” (individual Big Five values - see below on psychometrics) and could opt-in to share their Facebook profile data with the researchers. Firstly the research team was surprised by the number of people who took the test. Secondly they tied the results together with other data available from Facebook such as gender, age, place of residence, ‘likes’, etc. From this they were able to make some reliable deductions, such as one of the best indicators for heterosexuality was ‘liking’ Wu-tang Clan, a US hip-hop group. People who followed Lady Gaga were likely to be extroverts, and introverts tended to like philosophy. Each piece was too weak to provide any reliable prediction, but thousands of individual data points combined to allow some accurate predictions. By 2012 Dr. Kosinski and his team, based upon 68 Facebook ‘likes’, was able to predict skin colour (95% accuracy), sexual orientation (88%) and affiliation to the Democratic or Republican Party (85%). With lower accuracy they also tried to predict alcohol, cigarette and drug use, as well as religious affiliation, etc. They later claimed that 70 ‘likes’ were enough to outdo what a person’s friends knew, 150 what their parents knew, and 300 what their partner knew.

It is said that on the day that Dr. Kosinski published these findings, he received two phone calls. The threat of a lawsuit and a job offer. Both from Facebook.

Dr. Kosinski is now an associate professor at Stanford Graduate School of Business. As a quick follow-on, Facebook ‘likes’ became private by default, but many apps and online quizzes ask for consent to access a Facebook users private data as a precondition. Dr. Kosinski is quoted as saying that using a smartphone is like permanently filling out a psychological questionnaire. Every little data point helps, i.e. the number and type of profile pictures, how often users change them (or not), the number of contacts, frequency and duration of calls, etc. Even the motion sensor in a smartphone can reveal how quickly we move, and how far we travel (signs of emotional instability). Once the data collected, it can be ‘people searched’ to identify angry introverts, or undecided Democrats, or anxious fathers…

Dr. Kosinski has his own website where he points to the myPersonality Project, the Concerto adaptive testing platform, and the Apply Magic Sauce personalisation engine.

The Psychometrics Centre of the University of Cambridge has a whole page of psychological profile tests, ranging from MyPersonality 100 to MyIQ through FaceIQ and 2D Spatial Reasoning.

The other two authors of the Dr. Kosinski's 2013 paper were David Stillwell and Thore Graepel. Stillwell worked with Kosinski in Cambridge and is now deputy director of the Psychometrics Centre and lecturer in big data analytics and quantitive social sciences (see his 2017 video). Graepel worked in Microsoft Research in Cambridge, and is now Professor of Machine Learning at UCL and research lead at Google’s DeepMind (you can check out his work here as of 2012 in Microsoft Research). A number of Freedom of Information requests have been made to the University of Cambridge to get access to the research results. It would appear that the position of the university is that all the data belongs to Kosinski or Microsoft Research, or both.

It is said that Dr. Kogan wanted to commercialise Dr. Kosinski’s results, but he declined. Dr. Kosinski has also said that Dr. Kogan tried to buy myPersonality on behalf of a deep-pocketed company, SCL. After that Dr. Kogen then built a new app for his own startup Global Science Research. In 2014 data was harvested under a contract with Cambridge Analytica, and used to build a model of 50 million US Facebook users, including allegedly 5,000 data points per user. The app collected data for 270,000 facebook users, and with 185 friends per user, this represented 50 million full profiles. Of that 30 million profiles had enough information in them to allow a correlation with other real-world data held by data brokers and political campaigners. This meant that Cambridge Analytica could connect the psychometric Facebook profiles to 30 million actual voters. Facebook no longer allows such an expansive access to friends’ profiles using a simple API. It is still unclear what data was moved from the academic context to the commercial context in Cambridge Analytica, and what role Dr. Kogan play in that.